Many marketers approach free trials the way they approach buffets: eyes bigger than their stomachs, sampling everything, mastering nothing. You sign up with genuine enthusiasm, click through a few features, maybe build a test campaign, and then life happens. Three days before expiration, you panic-browse the interface trying to remember what impressed you enough to sign up in the first place.

Here's the reality: a Facebook ad builder free trial isn't a casual browsing experience. It's a compressed evaluation period in which you audition the platform while clarifying your own workflow needs. The difference between marketers who make confident purchasing decisions and those who let trials expire unused comes down to strategy, not time.

The challenge is real. You're already managing active campaigns, client deadlines, and the daily chaos of digital marketing. Adding "thoroughly evaluate new software" to that list feels like assigning yourself homework. But the cost of choosing wrong—or worse, staying stuck in inefficient workflows—compounds every single month.

This guide delivers a tactical framework for extracting genuine value from any Facebook ad builder trial period. You'll learn how to set measurable evaluation criteria before you even click "Start Trial," test features that actually matter for your workflow, and calculate real ROI using time-tracking data instead of hopeful guesses. Whether you're a solo consultant managing multiple client accounts or an agency leader scaling ad operations across your team, these strategies ensure your trial period generates actionable insights rather than vague impressions.

1. Map Your Current Pain Points Before Day One

The Challenge It Solves

Starting a trial without documented baseline metrics is like shopping without a list—you'll be distracted by shiny features that don't address your actual problems. Most marketers begin trials in reactive mode, exploring whatever catches their attention rather than systematically testing solutions to specific workflow bottlenecks.

This scattered approach makes objective comparison impossible. Without documented pain points, you can't measure whether a platform actually solves your problems or just looks impressive in demos.

The Strategy Explained

Spend 30-60 minutes before activating your trial documenting your current advertising workflow with brutal honesty. Create a simple spreadsheet tracking how long each campaign phase actually takes—not how long you think it should take, but real measured time from brief to launch.

Identify your top three workflow frustrations. Is it the tedious copy-paste work when launching ad variations? The time spent manually testing audience combinations? The back-and-forth with designers for creative assets? Be specific about what makes you want to throw your laptop out the window.

Set quantifiable success criteria. Instead of vague goals like "make campaign building easier," write measurable targets: "Reduce campaign setup time from 45 minutes to under 15 minutes" or "Launch 10 ad variations in the time it currently takes to launch 3."

Implementation Steps

1. Time-track your next 2-3 campaign builds using a simple timer app, noting each phase: research, audience setup, ad creation, review, launch.

2. Write down the three specific moments in your workflow where you think "there has to be a better way" most frequently.

3. Create a one-page evaluation scorecard listing your must-have features, nice-to-have features, and deal-breakers before starting any trial.
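If you would rather keep your baseline in a script than a spreadsheet, here is a minimal Python sketch, assuming you log the minutes spent in each phase of every build. The phase names follow step 1; the numbers are hypothetical sample data, chosen so the average lands on the 45-minute setup time used as an example in this guide.

```python
from statistics import mean

# Hypothetical sample data: minutes per phase for three manual campaign builds.
# Replace with the numbers from your own timer app.
baseline_builds = [
    {"research": 10, "audience setup": 12, "ad creation": 15, "review": 5, "launch": 3},
    {"research": 8, "audience setup": 14, "ad creation": 18, "review": 6, "launch": 4},
    {"research": 12, "audience setup": 10, "ad creation": 12, "review": 4, "launch": 2},
]

def phase_averages(builds):
    """Average minutes spent in each phase across all tracked builds."""
    return {phase: mean(build[phase] for build in builds) for phase in builds[0]}

def average_total(builds):
    """Average end-to-end minutes from brief to launch."""
    return mean(sum(build.values()) for build in builds)

print(phase_averages(baseline_builds))
print(average_total(baseline_builds))  # 45 with the sample data above
```

The per-phase averages show where the time actually goes, which tells you which features to test first during the trial.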

Pro Tips

Share your evaluation criteria with your team before the trial starts. Their input often reveals workflow pain points you've normalized and stopped noticing. Keep this document open during your entire trial period and reference it daily to stay focused on features that actually matter for your specific use case.

2. Test the Campaign Builder with a Real Project

The Challenge It Solves

Demo environments and sample campaigns create a false sense of capability. The platform's tutorial examples use perfect inputs—clean audience data, professional creative assets, straightforward objectives. Your real campaigns involve messy client briefs, limited creative options, and complex targeting requirements.

Testing with fake scenarios means you'll only discover the platform's limitations after you've already committed to a subscription.

The Strategy Explained

Within the first 48 hours of your trial, build an actual campaign you need to launch anyway. Use a real client brief or an upcoming promotion for your own business. This approach reveals how the platform handles your specific workflow quirks, data quality issues, and creative constraints.

Pay attention to friction points during the build process. Does the interface make assumptions about your campaign structure that don't match your strategy? Can you easily customize targeting beyond the platform's suggested audiences? How does it handle multiple ad variations with different creative approaches?

Compare the time investment against your documented baseline. If the platform claims to save time but your first real campaign takes longer than your manual process, that's critical data, though you should allow for the natural learning curve on your first few builds.

Implementation Steps

1. Select a real campaign you're planning to launch within the next week as your trial test project.

2. Build the campaign in the trial platform while time-tracking each phase, then compare against your baseline metrics.

3. Document every moment where you thought "I wish it would..." or "Why can't I..." to identify feature gaps that matter for your workflow.

Pro Tips

Don't just build the campaign—actually launch it if possible. Many platforms look impressive until you hit the publish button and discover sync issues, approval delays, or unexpected limitations. The real test of any ad builder is whether campaigns successfully go live and perform as expected in Meta's ecosystem.

3. Stress-Test Bulk Operations Early

The Challenge It Solves

Single campaign builds reveal basic functionality, but your real efficiency gains come from scale operations. If you're launching multiple campaigns simultaneously, testing dozens of audience combinations, or managing ads across multiple client accounts, bulk capabilities determine whether a platform genuinely saves time or just shifts bottlenecks.

Many marketers discover scale limitations only after purchasing, when they attempt to launch their first major campaign initiative.

The Strategy Explained

Dedicate one trial session to pushing the platform's volume limits. Try launching 20+ ad variations at once. Test building campaigns for multiple clients or business units simultaneously. Attempt bulk editing operations on existing campaigns to see how the platform handles mass updates.

This stress test reveals whether the platform's automation actually scales or if it's designed for small-volume users. Watch for performance degradation, interface lag, or feature limitations that only appear at higher volumes.

For platforms with AI-powered campaign builders, test whether the system maintains quality when generating multiple campaigns in rapid succession. Do the seventh and eighth AI-generated campaigns show the same strategic depth as the first two, or does quality decline?

Implementation Steps

1. Create a bulk test scenario matching your typical high-volume needs—whether that's 15 ad variations for one campaign or 5 separate campaigns for different clients.

2. Launch all variations simultaneously and monitor for system performance issues, error messages, or unexpected limitations.

3. Verify that all bulk-created campaigns properly sync to Meta Ads Manager with correct settings and targeting parameters.
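To set up a 20-variation stress test without typing each combination by hand, you can generate the full grid of creative inputs. This is a hypothetical Python sketch: the headlines, image file names, and naming scheme are placeholders, and `missing_after_sync` assumes you paste in the ad names you see in Ads Manager and that the platform keeps your ad names intact when it syncs.

```python
from itertools import product

# Hypothetical creative inputs for one bulk test; swap in your real assets.
headlines = ["Save 20% today", "Free shipping this week", "New spring collection", "Bestsellers are back"]
images = ["lifestyle.jpg", "product.jpg", "ugc.jpg", "flatlay.jpg", "model.jpg"]

def build_variation_matrix(headlines, images):
    """Pair every headline with every image and give each variation a unique name."""
    return [
        {"name": f"V{i:02d} | {headline} | {image}", "headline": headline, "image": image}
        for i, (headline, image) in enumerate(product(headlines, images), start=1)
    ]

def missing_after_sync(intended, synced_names):
    """Names of variations you built but cannot find among the synced ads."""
    synced = set(synced_names)
    return [v["name"] for v in intended if v["name"] not in synced]

variations = build_variation_matrix(headlines, images)
print(len(variations))  # 4 headlines x 5 images = 20 variations
```

Unique, numbered names make step 3 a simple lookup: anything `missing_after_sync` returns is a variation that did not make it into Meta Ads Manager.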

Pro Tips

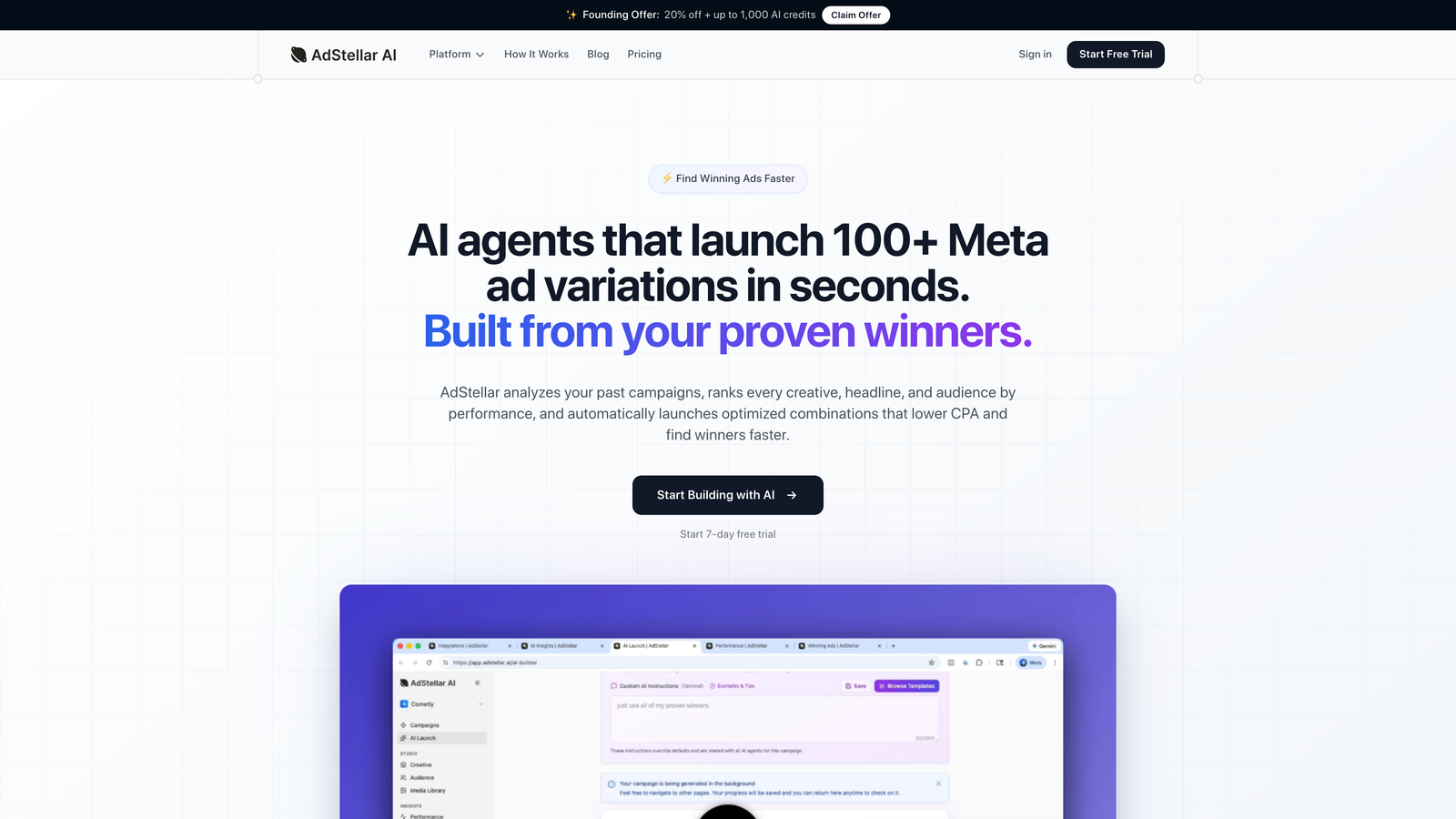

If you're evaluating AI-powered platforms like AdStellar AI with its 7-agent system, test whether the AI maintains consistent strategic reasoning across multiple rapid builds. The platform's ability to analyze historical performance and select winning elements should remain reliable whether you're building one campaign or ten simultaneously. Quality degradation at scale is a red flag regardless of how impressive individual campaign builds appear.

4. Evaluate the Learning Curve and Onboarding Experience

The Challenge It Solves

A powerful platform that your team refuses to use delivers zero value. Many sophisticated ad builders fail at adoption because they prioritize feature depth over usability. If your team can't reach competency within a few hours, the platform will become "that tool we tried once" rather than your primary workflow.

The onboarding experience is often a better predictor of long-term adoption than the feature list.

The Strategy Explained

Track your time-to-competency honestly. How long did it take before you could build a campaign without constantly referencing help documentation? Could you explain the platform's core workflow to a colleague after one day of use? Would you feel confident training a new team member on this tool?

Evaluate the quality of onboarding resources. Are tutorials specific and actionable, or generic and vague? Does the platform offer contextual help when you're stuck, or do you need to leave the interface to search documentation? How responsive is support when you encounter genuine confusion?

If you're evaluating for team use, involve at least one other person during your trial. Their fresh perspective reveals whether your growing comfort comes from genuinely intuitive design or just your personal familiarity with the interface.

Implementation Steps

1. Note the exact time when you start using the platform and when you complete your first campaign without referencing help resources.

2. Test support quality by asking one genuine question through each available channel (chat, email, documentation search) and comparing response times and helpfulness.

3. Invite a colleague to build one campaign using only the platform's onboarding resources, then debrief about their experience and frustration points.

Pro Tips

Beware of platforms that require extensive training before basic competency. While some complexity is justified for enterprise-level tools, if you're still confused after three hours of active use, that complexity will compound across your entire team. The best platforms balance power with accessibility—you should feel capable on day one and discover advanced features gradually rather than feeling overwhelmed immediately.

5. Verify Data Integration and Reporting Accuracy

The Challenge It Solves

A beautiful interface means nothing if the platform can't reliably sync with your Meta account or if its reporting data conflicts with Ads Manager metrics. Data accuracy issues create cascading problems—you make optimization decisions based on incorrect information, lose trust in the platform, and eventually abandon it for manual workflows where you can verify every number.

Many platforms look impressive in demos but reveal sync delays, missing metrics, or data discrepancies only after you've committed to using them for active campaigns.

The Strategy Explained

Within your first few trial days, launch a small test campaign through the platform and immediately verify that all settings transferred correctly to Meta Ads Manager. Check targeting parameters, budget allocations, ad creative, and campaign structure to ensure nothing got lost in translation.

Let the campaign run for at least 24-48 hours, then compare the platform's reported metrics against the numbers in native Ads Manager. Look for discrepancies in spend, impressions, clicks, and conversions. Small differences might be acceptable due to reporting delays, but significant gaps indicate serious integration problems.

Test the platform's ability to pull historical data from your existing campaigns. Can it accurately analyze your past performance to inform future campaign decisions? This capability is especially critical for AI-powered builders that claim to optimize based on historical trends.

Implementation Steps

1. Launch a low-budget test campaign through the trial platform and immediately verify settings in Meta Ads Manager match your intentions.

2. After 48 hours of campaign activity, create a comparison spreadsheet showing key metrics from both the platform and native Ads Manager.

3. Document any discrepancies larger than 5% and ask support to explain the differences before making purchase decisions.
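The comparison spreadsheet from step 2 can also be a few lines of Python. This sketch treats Ads Manager as the reference, measures each gap relative to the Ads Manager figure, and flags anything above the 5% threshold from step 3. The metric values are invented for illustration; replace them with the figures you copy from both tools.

```python
# Hypothetical 48-hour figures for one test campaign.
platform_report = {"spend": 98.40, "impressions": 12_450, "clicks": 231, "conversions": 9}
ads_manager_report = {"spend": 100.00, "impressions": 12_610, "clicks": 236, "conversions": 12}

THRESHOLD = 0.05  # the 5% tolerance from step 3

def flag_discrepancies(platform, ads_manager, threshold=THRESHOLD):
    """Return metrics where the platform differs from Ads Manager by more than `threshold`."""
    flagged = {}
    for metric, reference in ads_manager.items():
        reported = platform.get(metric)
        if reported is None:
            flagged[metric] = "missing from platform report"
        elif reference == 0:
            # Avoid dividing by zero; any nonzero platform value is suspicious here.
            if reported != 0:
                flagged[metric] = "platform reports a value, Ads Manager shows 0"
        else:
            gap = abs(reported - reference) / reference
            if gap > threshold:
                flagged[metric] = f"{gap:.1%} gap"
    return flagged

print(flag_discrepancies(platform_report, ads_manager_report))
# {'conversions': '25.0% gap'}
```

Each flagged metric becomes a concrete question for support, which is easier to answer than "your numbers look off."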

Pro Tips

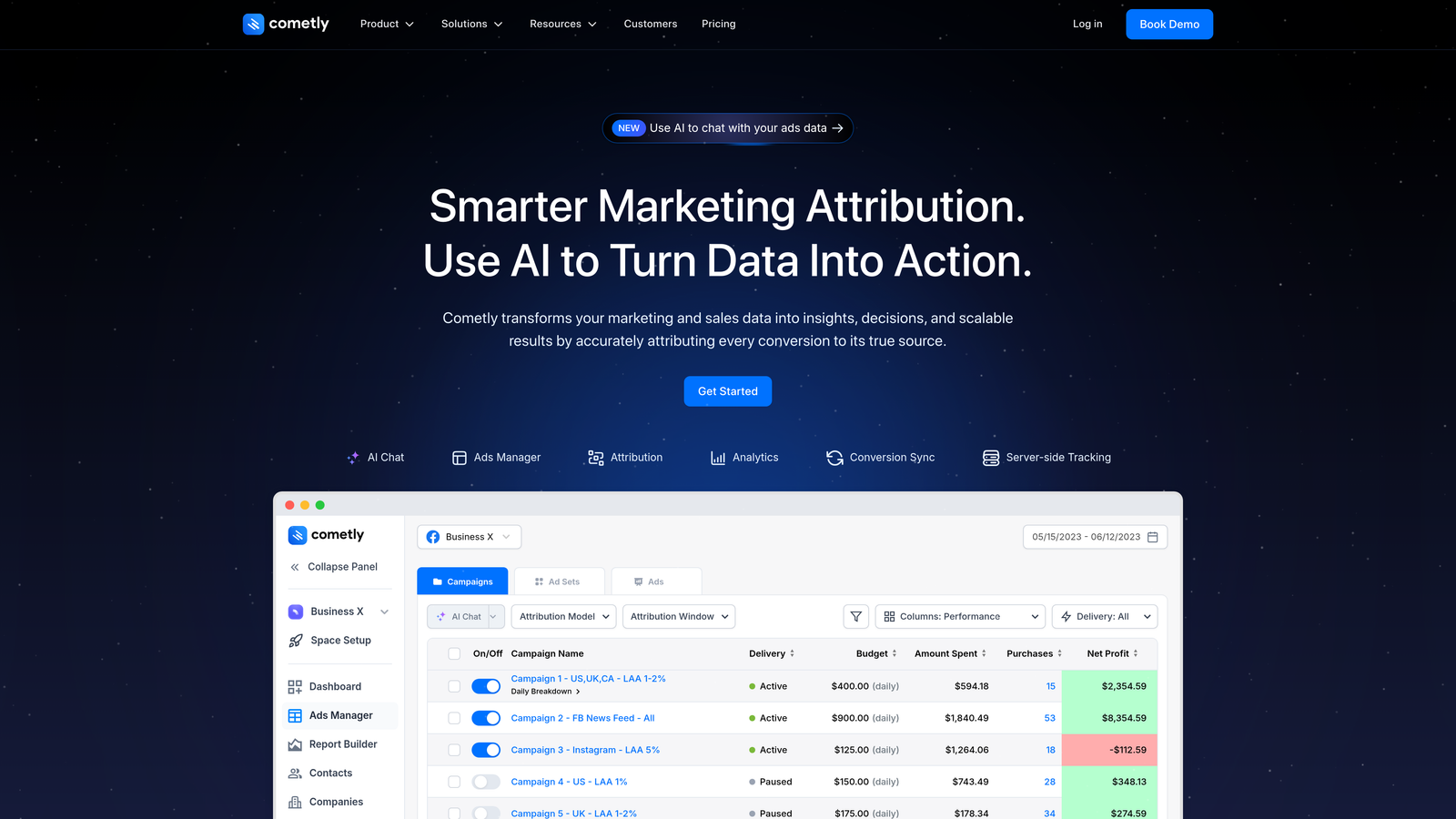

Pay special attention to conversion tracking accuracy if you use custom events or integrate with attribution platforms like Cometly. The platform should correctly attribute conversions to the right campaigns and ad sets without double-counting or missing events. If you can't trust the data during your trial, you definitely can't trust it when managing real budget.

6. Calculate Your Actual ROI Before Trial Ends

The Challenge It Solves

Many purchase decisions rely on gut feeling rather than quantified value. You have a vague sense that the platform "seems helpful" but no concrete data on whether the subscription cost justifies the time savings. This ambiguity leads to either premature commitment or missed opportunities: you buy based on impressive demos, or you dismiss valuable tools because you never measured their actual impact.

Without calculated ROI, you're making a financial decision in the dark.

The Strategy Explained

Use your trial period to generate real ROI numbers based on actual time savings. Compare the hours you invested in campaign builds during the trial against your baseline metrics from manual workflows. If you normally spend 45 minutes building a campaign and the platform reduces that to 15 minutes, you're saving 30 minutes per campaign.

Calculate monthly savings by multiplying per-campaign time savings by your typical campaign volume. If you launch 20 campaigns monthly and save 30 minutes each, that's 10 hours saved per month. Multiply those hours by your hourly rate or opportunity cost to get your dollar value.

Compare your calculated monthly savings against the platform's subscription cost. If those 10 hours are worth $150 each ($1,500 of time monthly) and the platform costs $200, the ROI is clear. If savings barely exceed the subscription cost, factor in learning-curve time and consider whether marginal gains justify the switch.
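The arithmetic above fits in one small helper. This is a minimal sketch, assuming a flat hourly rate and the same saving on every campaign. The `users` parameter is a hypothetical extension for teams where several people build campaigns at the same volume, and the $150 hourly rate is an assumption chosen so that 10 saved hours equal $1,500.

```python
def monthly_roi(baseline_minutes, trial_minutes, campaigns_per_month,
                hourly_rate, subscription_cost, users=1):
    """Estimate monthly time savings and net value of an ad builder subscription.

    Assumes every user saves the same minutes per campaign and launches
    `campaigns_per_month` campaigns.
    """
    minutes_saved_per_campaign = baseline_minutes - trial_minutes
    hours_saved = minutes_saved_per_campaign * campaigns_per_month * users / 60
    value_of_time = hours_saved * hourly_rate
    return {
        "hours_saved": hours_saved,
        "value_of_time": value_of_time,
        "net_value": value_of_time - subscription_cost,
        "roi_multiple": value_of_time / subscription_cost,
    }

# 45 -> 15 minutes per campaign, 20 campaigns a month, hypothetical $150/hour.
print(monthly_roi(45, 15, 20, hourly_rate=150, subscription_cost=200))
# {'hours_saved': 10.0, 'value_of_time': 1500.0, 'net_value': 1300.0, 'roi_multiple': 7.5}
```

Running it with your own baseline and trial timings, rather than the example figures, is what turns the trial into a budget argument.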

Implementation Steps

1. Track exact time spent on every campaign-related activity during your trial period, from research to launch.

2. Calculate your average time savings per campaign by comparing trial activities against your documented baseline metrics.

3. Project monthly savings by multiplying per-campaign savings by your typical monthly campaign volume, then compare against subscription costs.

Pro Tips

Be honest about your campaign volume projections. If you only launch 3-4 campaigns monthly, even significant per-campaign time savings might not justify premium platform costs. Conversely, if you're managing dozens of campaigns across multiple clients, even modest per-campaign savings compound into substantial monthly value. Factor in not just your time but team member time if multiple people will use the platform.

7. Schedule a Decision-Making Review Session

The Challenge It Solves

Most trials expire while you're busy with other priorities. You intend to make a thoughtful evaluation, but suddenly it's the last day and you're making a snap decision based on incomplete information. Either you panic-purchase to avoid losing your setup work, or you let it expire and never revisit the evaluation—even if the platform could genuinely improve your workflow.

Without a structured decision process, your trial insights evaporate into vague impressions rather than actionable conclusions.

The Strategy Explained

Block 60-90 minutes on your calendar 2-3 days before trial expiration for a formal evaluation review. This isn't optional—treat it like a client meeting you can't reschedule. During this session, review your original evaluation scorecard, assess whether the platform addressed your documented pain points, and examine your calculated ROI numbers.

Prepare your outstanding questions before this session. If you're uncertain about specific features, scalability, or integration capabilities, compile those questions and reach out to support at least 48 hours before your review session. This timing ensures you get answers before making your final decision.

Involve decision stakeholders in this review if you're evaluating for team use. Having your manager, team members, or finance approver present ensures everyone's concerns are addressed and you're not making assumptions about budget or workflow priorities.

Implementation Steps

1. Calendar your evaluation review session when you start the trial, scheduling it 2-3 days before expiration to allow time for follow-up questions.

2. Create a decision document template during your first trial day, then populate it throughout the trial period with observations, metrics, and concerns.

3. Compile all outstanding questions 48 hours before your review session and send them to support, requesting responses before your scheduled evaluation time.
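Working out the milestone dates on day one makes the review hard to miss. This Python sketch assumes the trial expires a fixed number of days after you start (check your platform's terms for exactly when it ends); the 14-day length and the start date are hypothetical.

```python
from datetime import date, timedelta

def trial_milestones(start: date, trial_days: int, review_buffer_days: int = 3) -> dict:
    """Key calendar dates, assuming the trial ends `trial_days` after `start`."""
    expires = start + timedelta(days=trial_days)
    review = expires - timedelta(days=review_buffer_days)  # 2-3 days before expiration
    questions_due = review - timedelta(days=2)              # 48 hours before the review
    return {"questions_to_support": questions_due, "review_session": review, "trial_expires": expires}

for label, day in trial_milestones(date(2025, 3, 3), trial_days=14).items():
    print(f"{label}: {day.isoformat()}")
# questions_to_support: 2025-03-12
# review_session: 2025-03-14
# trial_expires: 2025-03-17
```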

Pro Tips

Come to your review session with a clear decision framework: What would make this an immediate "yes"? What would make it a definite "no"? What factors would lead to "not now, but revisit in 6 months"? Having these criteria defined prevents emotional decision-making and ensures your choice aligns with strategic priorities rather than recency bias from your last interaction with the platform.
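One way to make that framework explicit is to write the rules down before the review. The rules below are one hypothetical set, not a standard: any observed deal-breaker means "no"; all must-haves met, positive net value, and no open questions means "yes"; everything else means "not now, revisit in 6 months." The `Scorecard` structure is an illustrative stand-in for the one-page scorecard from step 1.

```python
from __future__ import annotations

from dataclasses import dataclass, field

@dataclass
class Scorecard:
    """Hypothetical structure mirroring the one-page evaluation scorecard."""
    must_haves: dict[str, bool]      # feature -> met during the trial?
    deal_breakers: dict[str, bool]   # issue -> observed during the trial?
    monthly_net_value: float         # from your ROI calculation
    open_questions: list[str] = field(default_factory=list)

def decide(card: Scorecard) -> str:
    """Map trial evidence to 'yes', 'no', or 'not now, revisit in 6 months'."""
    if any(card.deal_breakers.values()):
        return "no"
    if all(card.must_haves.values()) and card.monthly_net_value > 0 and not card.open_questions:
        return "yes"
    return "not now, revisit in 6 months"

card = Scorecard(
    must_haves={"bulk launch": True, "Ads Manager sync": True},
    deal_breakers={"data discrepancies over 5%": False},
    monthly_net_value=1300,
)
print(decide(card))  # yes
```

Agreeing on rules like these with stakeholders before the session keeps the final call tied to the evidence you gathered rather than to your most recent impression.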

Putting It All Together

Your Facebook ad builder free trial is essentially a consulting engagement, paid for with your time, in which you're both the consultant and the client. The platform is auditioning for a role in your workflow, and you're evaluating whether it deserves that role based on measurable performance rather than marketing promises.

The marketers who extract maximum value from trial periods share a common approach: they enter with documented problems, test with real workflows, and exit with quantified insights. They don't browse features aimlessly—they systematically validate whether the platform solves their specific pain points more effectively than their current process.

This strategic approach transforms trial periods from overwhelming feature tours into focused evaluation sprints. You're not trying to master every capability or explore every menu. You're answering one critical question: Does this platform deliver enough measurable value to justify its ongoing cost in my specific workflow?

The difference between a wasted trial and a confident purchase decision comes down to preparation and measurement. Document your baseline before you start. Test with real projects that reveal actual workflow friction. Calculate ROI using time-tracking data rather than hopeful estimates. Schedule your evaluation review before the trial pressure builds.

Remember that trial periods work both ways. Yes, you're evaluating the platform—but you're also discovering your own workflow inefficiencies and clarifying your actual needs versus assumed requirements. Sometimes the most valuable trial outcome isn't finding the perfect tool but gaining clarity about what you actually need to improve.

Ready to transform your advertising strategy? Start a free trial with AdStellar AI and be among the first to launch and scale your ad campaigns 10× faster with our intelligent platform that automatically builds and tests winning ads based on real performance data.