Most marketers approach free trials backwards. They sign up with vague intentions to "check it out," click around aimlessly for a few days, then let the trial expire without any real sense of whether the platform could transform their workflow. The result? Missed opportunities to evaluate tools that could save dozens of hours each month.

Facebook ad campaign automation platforms represent a significant shift in how marketers approach advertising. These systems use AI to handle the repetitive, time-consuming tasks that drain your schedule—campaign structure, audience targeting, creative testing, budget allocation. But here's the challenge: you typically have 7-14 days to determine if this technology fits your specific needs.

The difference between a wasted trial and a transformative discovery comes down to strategy. You need a systematic approach that tests the platform's core capabilities against your actual workflow challenges. You need to push the automation to its limits, compare it against your manual processes, and calculate real ROI before your trial clock runs out.

These seven strategies turn your free trial into a comprehensive evaluation process. Each one addresses a specific aspect of automation—from initial setup to long-term scalability—giving you the data you need to make a confident purchasing decision.

1. Audit Your Current Campaign Pain Points Before Day One

The Challenge It Solves

Starting a trial without clear evaluation criteria is like shopping without a list—you'll be distracted by features you don't need while missing the ones that matter most. Many marketers activate their trial, explore random features, then struggle to articulate whether the platform actually solved their problems.

Without documented baseline metrics, you can't measure improvement. Without identified pain points, you can't test solutions. You need a framework that transforms your trial from casual browsing into targeted problem-solving.

The Strategy Explained

Before activating your free trial, spend 30-60 minutes documenting your current advertising workflow. Create a simple spreadsheet with three columns: Pain Point, Current Time Investment, and Evaluation Criteria.

List every frustration in your campaign building process. How long does it take to structure a new campaign? How many hours do you spend on audience research? How often do you manually duplicate ads for testing? Be specific with time estimates—these become your baseline for measuring automation benefits.

Then define what success looks like for each pain point. If campaign structuring currently takes 45 minutes, what time savings would justify the platform cost? If you struggle with creative testing consistency, what features would solve this? These criteria become your evaluation checklist throughout the trial.

Implementation Steps

1. Track your time for 2-3 days before starting the trial—note exactly how long each campaign task takes and where you feel most frustrated with manual processes.

2. Prioritize your top 3-5 pain points that, if solved, would significantly improve your advertising efficiency or results.

3. Write specific evaluation questions for each pain point: "Can this platform reduce campaign setup time to under 10 minutes?" or "Does the AI provide transparent reasoning for targeting recommendations?"
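If you would rather script the 2-3 days of time tracking than maintain the spreadsheet by hand, the following minimal Python sketch averages the logged minutes per task and ranks the most time-consuming ones. The task names and minute values are hypothetical placeholders; substitute your own log.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical time log from the 2-3 tracking days: (task, minutes spent).
# Replace these placeholder entries with your own measurements.
time_log = [
    ("Campaign structure", 50),
    ("Campaign structure", 40),
    ("Audience research", 90),
    ("Audience research", 70),
    ("Ad duplication for tests", 30),
    ("Ad duplication for tests", 25),
]

def baseline_minutes(log):
    """Average minutes per occurrence of each task: your pre-trial baseline."""
    by_task = defaultdict(list)
    for task, minutes in log:
        by_task[task].append(minutes)
    return {task: mean(values) for task, values in by_task.items()}

def top_pain_points(baseline, n=3):
    """Rank tasks by average time, longest first, and keep the top n."""
    return sorted(baseline.items(), key=lambda item: item[1], reverse=True)[:n]

baseline = baseline_minutes(time_log)
for task, minutes in top_pain_points(baseline):
    print(f"{task}: {minutes:.0f} min per occurrence")
```

Time is only one signal. A task that takes little time but causes frequent errors may still belong in your top 3-5, so treat the ranking as a starting point for prioritization rather than the final answer.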

Pro Tips

Share your pain point document with the platform's support team when you start your trial. Many automation platforms offer guided onboarding that can be customized to address your specific challenges. This ensures you're testing the exact features that matter most to your workflow, rather than following a generic demo script.

2. Start With Your Best-Performing Historical Data

The Challenge It Solves

Testing automation with random or hypothetical campaigns tells you nothing about real-world performance. You need to see how the AI handles your actual advertising history—your proven winners, your target audiences, your brand voice. Generic test campaigns might look impressive in demos but fail when applied to your specific market.

The most valuable insight from any trial comes from watching the platform interact with your historical success patterns. Does it recognize what's worked before? Can it build on proven elements? Does it understand the nuances of your industry and audience?

The Strategy Explained

Begin your trial by importing or recreating 2-3 of your best-performing campaigns from the past 6-12 months. Choose campaigns with clear success metrics—high conversion rates, low cost per acquisition, strong engagement. These become your benchmark for evaluating the automation's capabilities.

With platforms like AdStellar AI, the system can analyze your Facebook Page history to identify top-performing creatives, headlines, and audience segments. This allows you to test whether the AI recognizes the same patterns you've identified manually. Watch how it structures campaigns around your proven elements.

The goal isn't just to recreate past success—it's to see if the automation can identify why certain elements performed well and apply those insights to new variations. Can it extract the winning formula from your historical data and scale it intelligently?

Implementation Steps

1. Export performance data from your 2-3 best-performing campaigns, including creative assets, ad copy, targeting parameters, and key metrics like CTR, conversion rate, and CPA.

2. Connect the automation platform to your Facebook Ad Account and allow it to analyze your historical campaign data and Page performance.

3. Create a new campaign using the platform's AI builder and compare its recommendations against what you know worked—does it select similar audiences, creative styles, and messaging approaches?

Pro Tips

Document the AI's reasoning for its recommendations. Advanced platforms provide transparency about why they selected specific targeting parameters or creative elements. This rationale reveals whether the system truly understands your advertising strategy or is making generic suggestions. If the AI can articulate why your winning elements succeeded, it's more likely to replicate that success at scale.

3. Run a Head-to-Head Test Against Manual Campaign Building

The Challenge It Solves

Automation sounds impressive until you measure it against your actual workflow. Without direct comparison, you're left with vague impressions rather than concrete data. You might feel like the platform saves time, but can you quantify exactly how much? Does the automated approach actually outperform your manual process, or just feel more modern?

Many marketers skip this critical step and later regret their purchasing decision. They realize the automation doesn't actually save as much time as expected, or that the AI's recommendations aren't as strategic as their manual approach. A head-to-head test eliminates guesswork.

The Strategy Explained

Set aside 2-3 hours during your trial for a structured comparison test. Choose a campaign concept you need to launch—perhaps a product promotion or seasonal offer. Build it twice: once using your traditional manual process, once using the automation platform.

Time each approach precisely. For the manual build, track every step: research time, creative selection, ad copy writing, audience configuration, campaign structure setup. For the automated build, track the same elements—even if the platform handles them automatically, note how long the overall process takes.

Compare not just speed, but quality. Evaluate targeting sophistication, creative variety, campaign structure, and strategic thinking. Sometimes automation is faster but produces generic results. Sometimes it's only marginally faster but introduces strategic improvements you wouldn't have considered manually.

Implementation Steps

1. Select a campaign you genuinely need to launch during your trial period—this ensures realistic testing conditions and useful output regardless of which method you choose.

2. Build the campaign manually first, documenting your time at each stage: audience research (X minutes), creative selection (X minutes), copywriting (X minutes), structure setup (X minutes), review and launch (X minutes).

3. Build the same campaign using the automation platform, tracking the same time segments and noting where the AI handles tasks automatically versus where you still need manual input.
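To make the stage-by-stage comparison concrete, this Python sketch tabulates the minutes recorded for each stage of both builds and reports per-stage and total savings. The stage names follow the list above; every minute value is a hypothetical placeholder.

```python
# Stages from the head-to-head test; all minute values are hypothetical placeholders.
STAGES = ["audience research", "creative selection", "copywriting",
          "structure setup", "review and launch"]

manual = {"audience research": 25, "creative selection": 15, "copywriting": 20,
          "structure setup": 15, "review and launch": 10}
# For the automated build, record the time you actually spent at each stage,
# including any manual input the platform still needed.
automated = {"audience research": 3, "creative selection": 5, "copywriting": 4,
             "structure setup": 2, "review and launch": 8}

def compare(manual, automated, stages):
    """Return per-stage rows (stage, manual, automated, saved) plus both totals."""
    rows = []
    for stage in stages:
        saved = manual[stage] - automated[stage]
        rows.append((stage, manual[stage], automated[stage], saved))
    total_manual = sum(manual[s] for s in stages)
    total_auto = sum(automated[s] for s in stages)
    return rows, total_manual, total_auto

rows, total_manual, total_auto = compare(manual, automated, STAGES)
for stage, m, a, saved in rows:
    print(f"{stage:<20} manual {m:>3} min  automated {a:>3} min  saved {saved:>3} min")
share_saved = 100 * (total_manual - total_auto) / total_manual
print(f"total: {total_manual} -> {total_auto} min ({share_saved:.0f}% less time)")
```

The per-stage rows matter more than the total: a stage with small savings (review and launch in the placeholder data) shows where the platform still depends on your manual input.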

Pro Tips

Launch both versions if your budget allows, since this gives you performance data in addition to time savings. Even a few days of results can give an early signal of whether the automated campaign performs comparably to your manual approach, though short tests on small budgets are rarely conclusive. If the automation saves 40 minutes but produces campaigns that underperform by 20%, that's crucial information for your purchasing decision.
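The trade-off in that example can be checked with simple arithmetic. This sketch nets the monthly value of time saved against the change in results, assuming "performance" is measured as gross profit attributed to each campaign. All inputs, including the $2,000 per-campaign profit and the volume of 20 campaigns a month, are hypothetical.

```python
def time_value(minutes_saved_per_campaign, campaigns_per_month, hourly_rate):
    """Monthly dollar value of the time automation saves."""
    return minutes_saved_per_campaign * campaigns_per_month * hourly_rate / 60

def net_monthly_effect(minutes_saved_per_campaign, campaigns_per_month, hourly_rate,
                       profit_per_manual_campaign, relative_change):
    """Time value plus the change in attributed profit, per month.

    relative_change compares automated campaigns with your manual ones:
    -0.20 means the automated campaigns return 20% less.
    """
    saved = time_value(minutes_saved_per_campaign, campaigns_per_month, hourly_rate)
    profit_delta = profit_per_manual_campaign * relative_change * campaigns_per_month
    return saved + profit_delta

def break_even_drop(minutes_saved_per_campaign, campaigns_per_month, hourly_rate,
                    profit_per_manual_campaign):
    """Largest relative underperformance the time savings can absorb."""
    saved = time_value(minutes_saved_per_campaign, campaigns_per_month, hourly_rate)
    return saved / (profit_per_manual_campaign * campaigns_per_month)

# Hypothetical scenario: 40 minutes saved, 20 campaigns a month, $75/hour,
# $2,000 attributed gross profit per manual campaign, 20% underperformance.
net = net_monthly_effect(40, 20, 75, 2000, -0.20)
drop = break_even_drop(40, 20, 75, 2000)
print(f"Net monthly effect: ${net:,.0f}")
print(f"Break-even underperformance: {drop:.1%}")
```

With these placeholder numbers, the time saved is worth $1,000 a month while the 20% drop costs $8,000, and the savings only cover a drop of up to 2.5%. Your own figures will differ, but the structure shows why performance data from both versions can outweigh the timing results.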

4. Push the Bulk Launch Capabilities to Their Limits

The Challenge It Solves

Single campaign automation is convenient, but the real power emerges when you need to scale. Can the platform handle launching 10 variations simultaneously? What about 50? Most trial users test one or two campaigns and never discover whether the system can handle their actual volume requirements.

Bulk capabilities separate basic automation from enterprise-grade solutions. If you run multiple products, serve different audience segments, or test extensively, you need to know the platform won't bottleneck your workflow when you're operating at full capacity.

The Strategy Explained

Dedicate one day of your trial to stress-testing the bulk launch features. Create a scenario that mirrors your peak operational needs—perhaps launching campaigns for multiple products, or testing numerous creative variations across different audience segments.

With platforms offering bulk capabilities, you can potentially launch many campaign variations in roughly the time it would take to build one manually. Test whether the platform maintains quality at scale. Do the AI recommendations remain strategic when processing multiple campaigns simultaneously? Does the interface remain responsive or become sluggish?

Pay attention to the workflow efficiency. Can you review and adjust multiple campaigns quickly? Is there a unified dashboard that lets you compare performance across all variations? Bulk launching is only valuable if you can also manage and optimize at scale.

Implementation Steps

1. Create a list of 10-15 campaign variations you could realistically need—different products, audience segments, or creative approaches that represent your typical workload.

2. Use the platform's bulk launch features to build and launch all variations simultaneously, noting how long the process takes compared to launching them individually or manually.

3. Evaluate the management experience—can you easily monitor performance across all campaigns, identify winners, and pause underperformers without getting overwhelmed by data?
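One way to build a realistic 10-15 item list is to cross the dimensions you actually vary. This Python sketch uses `itertools.product` to enumerate every product, audience, and creative combination. The specific names are hypothetical placeholders, and the output is a planning list for your stress test, not input in any particular platform's import format.

```python
from itertools import product

# Hypothetical inputs: swap in your own products, segments, and creative angles.
products = ["Starter plan", "Pro plan"]
audiences = ["Past purchasers", "Lookalike 1%", "Broad 25-44"]
creatives = ["Testimonial video", "Feature carousel"]

def variation_matrix(*dimensions):
    """Every combination of the given dimensions, one tuple per ad variation."""
    return list(product(*dimensions))

variations = variation_matrix(products, audiences, creatives)
print(len(variations), "variations")
for i, (prod, aud, creative) in enumerate(variations, start=1):
    print(f"{i:02d}. {prod} | {aud} | {creative}")
```

Here 2 products × 3 audiences × 2 creatives yields 12 variations. Adding one more creative angle raises the count to 18, which shows how quickly variation volume outgrows manual building.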

Pro Tips

Test the platform's campaign reuse capabilities during this exercise. Advanced systems like AdStellar AI's Winners Hub let you save proven campaign elements and quickly apply them to new launches. If you identify a winning combination during your bulk test, see how easily you can replicate it across other campaigns. This reusability often provides more long-term value than the initial bulk launch speed.

5. Evaluate the AI's Targeting and Budget Recommendations

The Challenge It Solves

Not all AI recommendations are created equal. Some platforms make generic suggestions based on broad industry data. Others provide strategic recommendations tailored to your specific performance history. The difference can significantly affect your campaign results, but it's not always obvious during a casual trial.

Many marketers accept AI recommendations at face value without questioning the underlying logic. This works fine until you realize the platform is making suboptimal choices that hurt your performance. You need to understand whether the AI's strategic thinking matches or exceeds your manual expertise.

The Strategy Explained

Approach the AI's recommendations with healthy skepticism. When the platform suggests specific targeting parameters or budget allocations, dig into the reasoning. Advanced systems provide transparent explanations for their recommendations—why they selected certain audience attributes, how they calculated budget splits, what performance patterns influenced their decisions.

Compare the AI's suggestions against your manual strategy for the same campaign. Would you have made similar choices? If the recommendations differ significantly, evaluate whether the AI identified opportunities you missed or whether it's making generic suggestions that don't fit your specific situation.

Test the recommendations in practice. If the AI suggests targeting parameters you wouldn't normally use, launch a small test to see if the system identified a valuable audience segment. If budget recommendations differ from your standard approach, monitor whether the AI's allocation produces better results.

Implementation Steps

1. Create a campaign where you have strong opinions about the optimal targeting and budget approach—this gives you a clear comparison point for evaluating the AI's recommendations.

2. Review the platform's suggestions in detail, specifically looking for explanations of why it made each recommendation rather than just accepting the defaults.

3. Document cases where the AI's suggestions differ significantly from your approach, then test both versions if possible to see which performs better.
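When you test both versions, a raw difference in conversion rates can be noise, especially on a small trial budget. The sketch below applies a standard two-proportion z-test (chosen here for illustration, not a feature the platform necessarily provides) using only Python's standard library. The click and conversion counts are hypothetical.

```python
from math import erf, sqrt

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates.

    conv_* are conversions and n_* are clicks (or impressions, used consistently).
    Returns (rate_a, rate_b, z, p_value).
    """
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    # Pooled rate under the null hypothesis that both versions convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / se
    # Standard normal CDF via the error function, doubled for a two-sided test.
    p_value = 2 * (1 - 0.5 * (1 + erf(abs(z) / sqrt(2))))
    return rate_a, rate_b, z, p_value

# Hypothetical results: your targeting (A) vs. the AI's suggestion (B).
rate_a, rate_b, z, p = two_proportion_z_test(conv_a=40, n_a=1000, conv_b=58, n_b=1000)
print(f"A {rate_a:.1%}  B {rate_b:.1%}  z={z:.2f}  p={p:.3f}")
```

With these placeholder counts, 4.0% versus 5.8% looks like a clear win for B, yet z is about 1.86, short of the 1.96 usually required for significance at the 5% level. The test assumes independent audiences and reasonably large counts; an inconclusive result is a reason to keep testing rather than a verdict.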

Pro Tips

Look for platforms that show their work. Systems with transparent AI reasoning help you learn from the recommendations even if you don't always follow them. AdStellar AI's approach of explaining the rationale behind each agent's decisions turns the platform into a learning tool, not just an automation shortcut. This transparency becomes especially valuable when training team members or justifying strategic choices to stakeholders.

6. Test the Learning Loop With Real Campaign Feedback

The Challenge It Solves

Static automation follows the same process every time. Intelligent automation improves based on results. The difference determines whether the platform becomes more valuable over time or remains a one-trick tool. Most trial periods are too short to see dramatic learning improvements, but you can test whether the feedback loop exists and functions properly.

Without a working learning loop, you're essentially buying a template system that automates your current approach. With effective learning, you're investing in a system that continuously refines its strategy based on what actually works for your specific campaigns and audiences.

The Strategy Explained

Launch 2-3 campaigns early in your trial period, then let them run for several days to generate performance data. The key is observing how the platform responds to this data. Does it identify winning elements and deprioritize underperformers? Can it explain what it learned from the results?

Create a second round of campaigns using the same parameters. Compare the AI's recommendations between the first and second rounds. Has it adjusted its approach based on actual performance? Does it now favor creative styles, messaging angles, or audience segments that succeeded in the initial campaigns?

The most sophisticated platforms maintain a library of proven elements and actively reference this data in future campaign builds. Test whether the system can quickly identify and reuse your top-performing creatives, headlines, and targeting combinations.

Implementation Steps

1. Launch your first automated campaigns within the first 2-3 days of your trial to maximize the time available for data collection and learning.

2. After 3-4 days of performance data, create new campaigns with similar objectives and note whether the platform's recommendations have evolved based on what succeeded previously.

3. Specifically test features designed for learning and reuse—like AdStellar AI's Winners Hub—to see if the platform makes it easy to identify and replicate proven campaign elements.

Pro Tips

Ask the platform's support team about their learning algorithms during your trial. Understanding how the system processes performance data helps you evaluate whether the learning loop matches your workflow. Some platforms require extensive data before showing improvements, while others adapt quickly even with limited campaign history. This information influences whether the platform will provide value immediately or only after months of use.

7. Calculate Your Projected ROI Before the Trial Ends

The Challenge It Solves

The final days of a trial often feel rushed. You've tested features and explored capabilities, but haven't quantified whether the platform justifies its cost. Without concrete ROI calculations, purchasing decisions become emotional rather than analytical. You might love the interface but be unable to justify the expense, or you might overlook significant time savings because you never measured them properly.

Many marketers end trials with vague impressions—"it seems helpful" or "it's pretty fast"—rather than data-driven conclusions. This ambiguity leads to delayed decisions, extended trials, or purchases you later regret. Clear ROI calculation transforms your trial conclusion from uncertain to obvious.

The Strategy Explained

In the final 2-3 days of your trial, create a comprehensive ROI analysis. Start with time savings—compare your documented baseline metrics from Strategy 1 against your trial experience. If campaign building previously took 45 minutes and now takes 5 minutes, that's 40 minutes saved per campaign. Multiply by your typical monthly campaign volume to calculate total time savings.

Translate time savings into dollar value. If you bill clients at $150/hour, those 40 minutes are worth $100 per campaign; if your internal time is valued at $75/hour, they are worth $50. Calculate monthly and annual projections to see the full financial impact.

Beyond time savings, evaluate quality improvements. Did the AI identify targeting opportunities you would have missed? Did bulk launching enable testing at a scale previously impossible? These strategic benefits are harder to quantify but often provide more value than pure time savings.

Implementation Steps

1. Create a simple ROI spreadsheet with columns for: Task, Manual Time, Automated Time, Time Savings, Your Hourly Rate, Monthly Value, Annual Value.

2. Fill in actual data from your trial experience—use the time tracking from your head-to-head test and extrapolate based on your typical campaign volume.

3. Add qualitative benefits that influence ROI but aren't purely time-based: improved targeting precision, increased testing volume, reduced mental fatigue, team scalability, and strategic insights.
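The spreadsheet columns above map directly onto a short calculation. This Python sketch reuses the article's 45-to-5-minute campaign-building example; the monthly volumes, the second task, the $75 rate, and the $300 monthly subscription cost are hypothetical placeholders. The subscription line is an addition so the result shows net value rather than gross savings.

```python
from dataclasses import dataclass

@dataclass
class TaskRow:
    task: str
    manual_min: float      # minutes per occurrence, from your Strategy 1 baseline
    automated_min: float   # minutes per occurrence, measured during the trial
    per_month: int         # how many times you do this task in a typical month

    def savings_min(self) -> float:
        return self.manual_min - self.automated_min

def roi_rows(rows, hourly_rate):
    """Yield (task, manual, automated, savings, rate, monthly value, annual value)."""
    for r in rows:
        monthly_value = r.savings_min() * r.per_month * hourly_rate / 60
        yield (r.task, r.manual_min, r.automated_min, r.savings_min(),
               hourly_rate, monthly_value, monthly_value * 12)

# Campaign building uses the article's 45 -> 5 minute example; everything else
# (volumes, the second task, the rate, and the subscription cost) is hypothetical.
rows = [
    TaskRow("Campaign building", 45, 5, per_month=20),
    TaskRow("Creative test setup", 30, 10, per_month=8),
]
HOURLY_RATE = 75
MONTHLY_PLATFORM_COST = 300

table = list(roi_rows(rows, HOURLY_RATE))
monthly_total = sum(row[5] for row in table)
for task, m, a, saved, rate, monthly, annual in table:
    print(f"{task}: {m}->{a} min, saves {saved} min each, "
          f"${monthly:,.0f}/mo, ${annual:,.0f}/yr")
print(f"Net monthly value after subscription: ${monthly_total - MONTHLY_PLATFORM_COST:,.0f}")
```

The qualitative benefits from step 3 and the opportunity cost covered in the Pro Tips do not appear in this calculation, so read the net figure alongside them rather than as the complete answer.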

Pro Tips

Include opportunity cost in your ROI calculation. Time saved on campaign building can be redirected to strategy development, creative refinement, or client acquisition. If automation frees up 10 hours per month, what's the value of applying those hours to higher-leverage activities? This opportunity cost often exceeds the direct time savings value and provides the strongest justification for automation investment.

Putting It All Together

The difference between a productive free trial and a wasted opportunity comes down to intentionality. Most marketers activate their trial with vague curiosity and end it with vague impressions. You now have a systematic framework that transforms your 7-14 day trial window into a comprehensive evaluation process.

Start before you start—document your pain points, time investments, and evaluation criteria before activating the trial. This baseline becomes your measuring stick for everything that follows. Then work through each strategy methodically: test with your best historical data, run direct comparisons, push the bulk capabilities, evaluate AI quality, observe the learning loop, and calculate concrete ROI.

By the final day of your trial, you'll have specific answers to specific questions. Does this platform solve my documented pain points? Does it save enough time to justify the cost? Do the AI recommendations match or exceed my manual strategy? Can it scale with my growing needs? These aren't subjective impressions—they're data-driven conclusions based on systematic testing.

The trial period isn't just about evaluating the platform. It's about envisioning your workflow six months from now. Will you still be spending hours on manual campaign builds, or will you redirect that time to strategy and growth? Will you still be limited by your capacity to test variations, or will you launch campaigns at scale? The answer determines whether automation becomes a transformative investment or just another tool gathering digital dust.

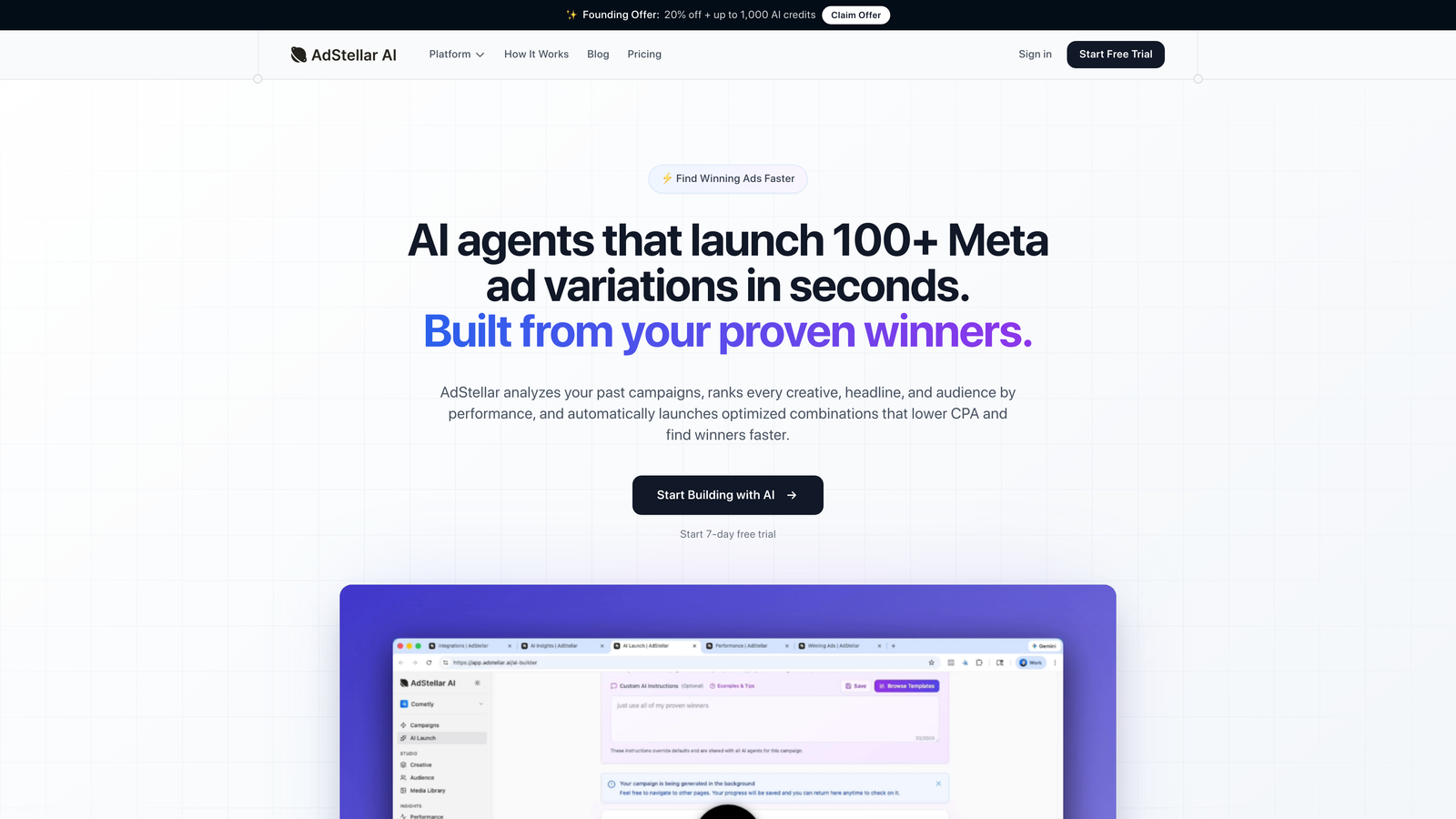

Ready to transform your advertising strategy? Start a free trial with AdStellar AI and be among the first to launch and scale your ad campaigns 10× faster with our intelligent platform that automatically builds and tests winning ads based on real performance data.