Your Meta ads are running. Budget is flowing. But here's the uncomfortable truth: most of that spend is probably working against you.

The difference between mediocre and exceptional Meta advertising rarely comes down to creative genius or targeting wizardry. It comes down to something far more fundamental: how you allocate your budget across campaigns, ad sets, and individual ads.

Think about it. You could have the perfect audience and compelling creative, but if your budget is spread too thin across underperformers while your winners sit starved for fuel, you're leaving serious money on the table. The math can be brutal: an account converting at 2% with disciplined allocation can out-earn one converting at 3% whose budget sits mostly on its weakest ad sets.

The challenge? Meta's platform gives you dozens of ways to structure and fund your campaigns. Campaign Budget Optimization versus ad set budgets. Manual bidding versus automatic. Daily caps versus lifetime budgets. Each decision cascades into real dollars won or lost.

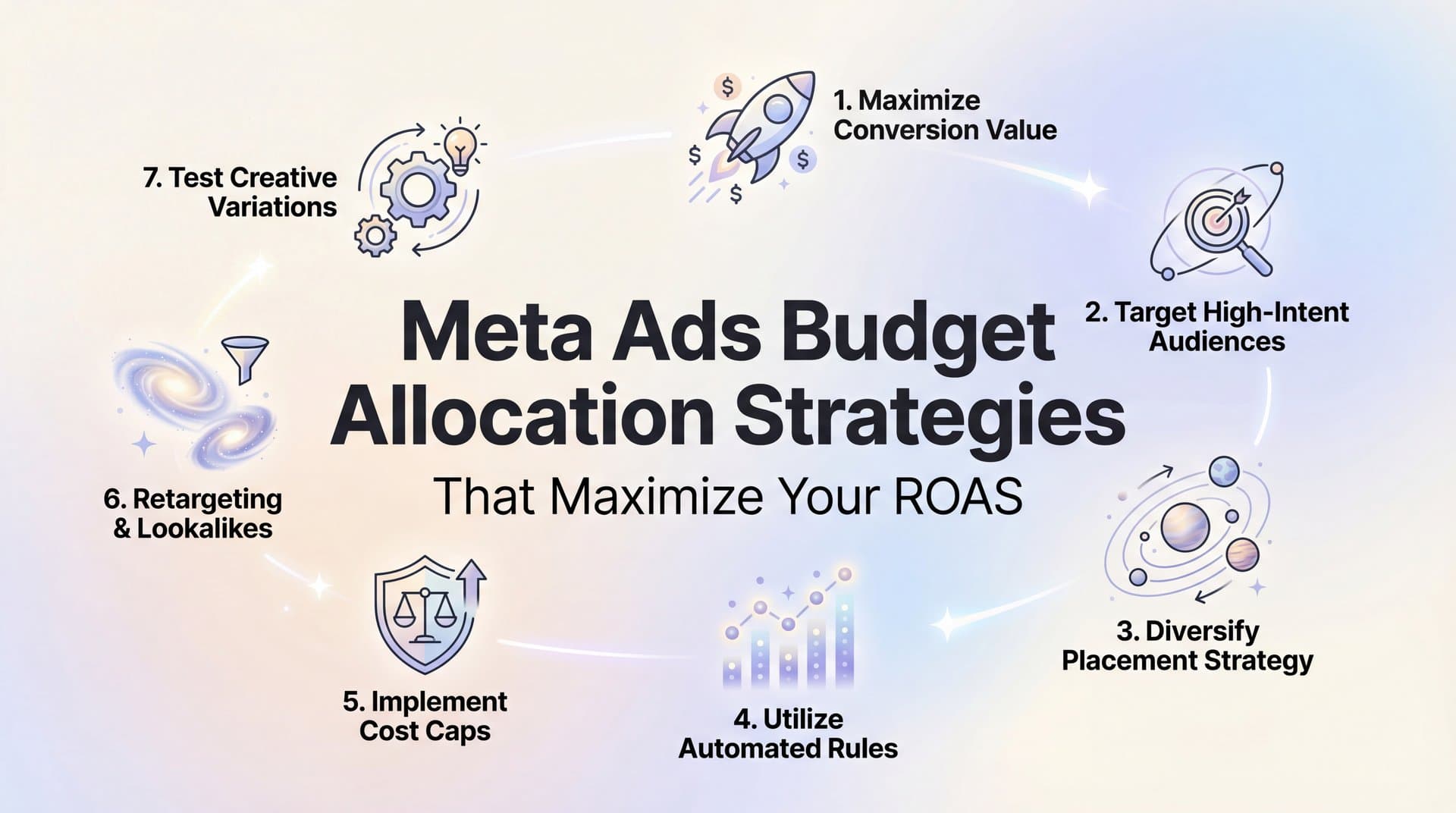

What follows are seven budget allocation strategies that work together as a system. These aren't isolated tactics you cherry-pick. They're interconnected approaches that compound when implemented thoughtfully. Some focus on campaign structure decisions. Others leverage AI-powered optimization. All of them share one goal: extracting maximum return from every dollar you invest in Meta advertising.

Let's break down exactly how to allocate your Meta ads budget like the top 1% of advertisers do.

1. The 70-20-10 Budget Framework

The Challenge It Solves

Most advertisers fall into one of two traps. Either they play it too safe, endlessly pumping money into the same campaigns while performance slowly decays. Or they swing too aggressive, constantly chasing shiny new strategies that burn through budget before proving themselves.

Both approaches fail because they lack structure. Without a systematic framework for balancing proven performance against necessary innovation, you're essentially gambling with your ad spend. The 70-20-10 framework solves this by creating clear guardrails for how much budget goes where.

The Strategy Explained

The 70-20-10 framework divides your total Meta advertising budget into three distinct buckets, each serving a specific purpose in your overall strategy.

Seventy percent of your budget flows to proven performers. These are campaigns, ad sets, and individual ads that have consistently delivered your target ROAS over at least a 30-day window. This is your bread and butter, your reliable revenue engine. These campaigns have exited Meta's learning phase and demonstrate stable performance.

Twenty percent goes to scaling tests. These are variations of your proven performers where you're testing one significant change at a time. Maybe you're expanding to a new audience segment similar to your winners. Or testing a new creative format that maintains your core messaging. The key: these build on what's working rather than starting from scratch.

Ten percent funds true experiments. This is your innovation budget for testing completely new approaches, audiences you haven't touched, or creative concepts that break from your established patterns. Most of these will fail. That's the point. This controlled experimentation prevents stagnation without risking your core performance.

Implementation Steps

1. Calculate your total monthly Meta advertising budget and multiply by 0.7, 0.2, and 0.1 to get your three allocation amounts.

2. Audit your current campaigns and categorize each into proven performer, scaling test, or experiment based on performance history and how different they are from your winners.

3. Reallocate budgets to match your 70-20-10 targets, using a two-week transition period rather than making drastic overnight changes that could disrupt the learning phase.

4. Set calendar reminders to review and rebalance monthly, promoting successful scaling tests to the proven performer bucket and graduating experiments that show promise to scaling tests.
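
The arithmetic in steps 1 and 3 can be sketched in a few lines of Python. This is a minimal illustration rather than a prescribed tool: the function names `split_budget` and `transition_plan`, the $10,000 monthly budget, the starting allocation, and the choice of equal daily steps across the two-week transition are all assumptions made for the example.

```python
def split_budget(total_monthly_budget, weights=(0.70, 0.20, 0.10)):
    """Split a monthly budget into proven / scaling-test / experiment buckets."""
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1.0")
    names = ("proven", "scaling_tests", "experiments")
    return {name: round(total_monthly_budget * w, 2) for name, w in zip(names, weights)}


def transition_plan(current, target, days=14):
    """Move each bucket from its current amount to its target in equal daily steps."""
    plan = []
    for day in range(1, days + 1):
        fraction = day / days
        plan.append({
            bucket: round(current[bucket] + (target[bucket] - current[bucket]) * fraction, 2)
            for bucket in target
        })
    return plan


# Hypothetical account: $10,000 per month, currently over-invested in experiments.
targets = split_budget(10_000)
current = {"proven": 5_000, "scaling_tests": 1_000, "experiments": 4_000}
plan = transition_plan(current, targets)

print(targets)    # the 70-20-10 target amounts
print(plan[6])    # allocation at the end of week one (halfway there)
print(plan[-1])   # final day of the transition, equal to the targets
```

Passing different weights, for example `split_budget(10_000, (0.80, 0.15, 0.05))`, gives the amounts for a more conservative split.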

Pro Tips

During Q4 or other high-stakes periods, consider shifting to 80-15-5 to maximize reliable revenue. Conversely, if you're seeing performance plateau across your proven performers, temporarily flip to 60-25-15 to accelerate your search for the next winning approach. The framework is a starting point, not a straitjacket.

2. CBO vs. Ad Set Budget Selection

The Challenge It Solves

One of the most consequential decisions you'll make is whether to use Campaign Budget Optimization or manage budgets at the ad set level. Get this wrong and you'll either watch Meta pour money into a single ad set while ignoring others, or you'll manually manage dozens of budgets like a part-time accountant.

The confusion stems from Meta's own messaging. They heavily promote CBO as the superior option, but the reality is more nuanced. CBO works brilliantly in some scenarios and catastrophically in others.

The Strategy Explained

Campaign Budget Optimization uses machine learning to distribute your budget across ad sets in real-time based on performance signals. You set one budget at the campaign level, and Meta's algorithm decides how much each ad set receives based on where it predicts the best results.

The magic of CBO: when you have similar ad sets competing for the same objective, Meta can shift budget toward whatever's performing best on any given day. This works exceptionally well when you're testing variations of the same core offer to similar audiences.

The limitation of CBO: Meta's algorithm optimizes for volume of conversions, not necessarily your business priorities. If you're running ad sets with vastly different purposes—say, cold prospecting versus retargeting—CBO will often starve your prospecting budget to feed the easier retargeting conversions.

Ad set budgets give you manual control. You decide exactly how much each ad set can spend. This approach shines when you need to enforce specific budget allocations across different funnel stages or audience types that shouldn't compete for the same dollars.

Implementation Steps

1. Map out your campaign structure and identify which ad sets serve fundamentally different purposes versus which are variations testing similar approaches.

2. Use CBO for campaigns where ad sets are testing similar audiences or creatives with the same objective, allowing Meta to find the best performers automatically.

3. Use ad set budgets when you're running different funnel stages in one campaign, testing drastically different audience types, or need to maintain minimum spend levels for specific segments.

4. Set minimum and maximum spend limits on individual ad sets within CBO campaigns to prevent the algorithm from going to extremes—this gives you guardrails while maintaining automation benefits.
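
The decision logic in these steps can be written down as a small rule of thumb. The sketch below is one possible encoding, not Meta's logic or an official recommendation: the `AdSet` fields, the `recommend_budget_mode` function, and the three return labels are hypothetical names chosen for illustration, and real campaigns will have more nuance than two or three flags can capture.

```python
from dataclasses import dataclass


@dataclass
class AdSet:
    name: str
    funnel_stage: str                  # e.g. "prospecting" or "retargeting"
    objective: str                     # e.g. "purchase"
    needs_minimum_spend: bool = False  # True if this segment must keep a spend floor


def recommend_budget_mode(ad_sets):
    """Suggest a budget mode for one campaign from the ad sets it would contain."""
    if not ad_sets:
        raise ValueError("at least one ad set is required")
    stages = {a.funnel_stage for a in ad_sets}
    objectives = {a.objective for a in ad_sets}
    # Ad sets with different purposes should not compete for the same dollars.
    if len(stages) > 1 or len(objectives) > 1:
        return "ad_set_budgets"
    # Similar ad sets, but at least one needs a guaranteed floor.
    if any(a.needs_minimum_spend for a in ad_sets):
        return "cbo_with_spend_limits"
    return "cbo"


similar = [
    AdSet("lookalike_1pct", "prospecting", "purchase"),
    AdSet("lookalike_3pct", "prospecting", "purchase"),
]
mixed = [
    AdSet("cold_interest", "prospecting", "purchase"),
    AdSet("cart_abandoners", "retargeting", "purchase"),
]
print(recommend_budget_mode(similar))
print(recommend_budget_mode(mixed))
```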

Pro Tips

A hybrid approach often works best: use CBO for your scaling tests and experiments where you want Meta to identify winners quickly, but use ad set budgets for your proven performers where you've already identified the optimal allocation. This combines automation's speed with manual control's precision.

3. Performance-Based ROAS Thresholds

The Challenge It Solves

Budget allocation becomes meaningless if you're not tying it to actual performance outcomes. Too many advertisers set budgets based on arbitrary numbers or gut feelings rather than the returns each campaign is actually generating.

The result? Campaigns that barely break even continue consuming budget while high-performers that could scale profitably sit capped at conservative spending levels. You need a systematic way to increase investment in what's working and cut what's not.

The Strategy Explained

Performance-based ROAS thresholds create clear rules for when to increase, maintain, or decrease budget allocation based on return on ad spend. This transforms budget management from subjective decision-making into objective, data-driven action.

Start by defining your ROAS tiers. A typical framework might look like this: campaigns hitting 4× ROAS or higher qualify for aggressive scaling. Those between 2.5× and 4× maintain current budget levels. Anything between 1.5× and 2.5× enters observation mode with reduced budget. Below 1.5× gets paused or dramatically restructured.

The specific thresholds depend entirely on your business economics. A business with 60% margins can be more aggressive than one with 20% margins. The key is establishing clear breakpoints where you know exactly what action to take based on performance data.

This approach works because it removes emotion from budget decisions. When a campaign you personally love drops below your threshold, the data tells you to cut it. When a campaign you're skeptical about crushes your top threshold, the data tells you to scale it regardless of your feelings.

Implementation Steps

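1. Calculate your true break-even ROAS by dividing your product price by what remains of it after cost of goods sold and other per-order costs (shipping, payment fees, allocated overhead). A product that keeps 60% of its price after those costs breaks even at roughly 1.67× ROAS. Then set your floor above that figure to cover your minimum acceptable profit.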

2. Define four ROAS tiers: aggressive scaling threshold, maintain threshold, observation threshold, and pause threshold, spacing them based on your business model and risk tolerance.

3. Set weekly review cycles where you pull ROAS data for each campaign over a 7-day window and categorize them into your defined tiers.

4. Implement your reallocation rules: increase budget 25-50% for aggressive scaling tier, hold steady for maintain tier, reduce by 30-50% for observation tier, and pause anything below your bottom threshold.
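
Here is a minimal sketch of how the tiers and reallocation rules might be encoded. It uses the example thresholds from above (4×, 2.5×, 1.5×) and the lower end of each adjustment range (+25%, −30%). Two assumptions are worth flagging: break-even ROAS is computed here as price divided by the margin left after variable costs, and the function names and sample campaigns are hypothetical.

```python
def break_even_roas(price, variable_cost_per_sale):
    """ROAS at which the margin on a sale exactly covers the ad spend behind it."""
    margin = price - variable_cost_per_sale
    if margin <= 0:
        raise ValueError("price must exceed variable cost per sale")
    return price / margin


def classify(roas, scale_at=4.0, maintain_at=2.5, observe_at=1.5):
    """Map a 7-day ROAS figure to one of the four tiers."""
    if roas >= scale_at:
        return "scale"
    if roas >= maintain_at:
        return "maintain"
    if roas >= observe_at:
        return "observe"
    return "pause"


def next_budget(current_budget, tier, scale_up=0.25, cut=0.30):
    """Apply the reallocation rule for a tier; a paused campaign gets zero."""
    if tier == "scale":
        return round(current_budget * (1 + scale_up), 2)
    if tier == "maintain":
        return current_budget
    if tier == "observe":
        return round(current_budget * (1 - cut), 2)
    return 0.0


# Hypothetical campaigns: name -> (7-day ROAS, current daily budget in dollars)
campaigns = {
    "evergreen": (4.6, 200.0),
    "new_hook": (3.1, 150.0),
    "broad_test": (1.9, 100.0),
    "old_promo": (1.2, 80.0),
}
for name, (roas, budget) in campaigns.items():
    tier = classify(roas)
    print(name, tier, next_budget(budget, tier))
```

The thresholds are parameters on purpose: a business whose break-even sits near 1.67× (a 60% margin) would likely set different breakpoints than one breaking even at 5× (a 20% margin).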

Pro Tips

Build in stabilization periods. Don't make budget changes based on a single day's data or during the learning phase. Meta's guidance is that an ad set typically needs about 50 optimization events within a 7-day period after its last significant edit to exit learning and stabilize. Wait for that stability before applying your ROAS thresholds aggressively.

4. Dayparting and Schedule-Based Pacing

The Challenge It Solves

Your audience doesn't convert uniformly throughout the day or week. Yet many advertisers spread their budget evenly across all hours, effectively overspending during low-conversion windows while underspending when their audience is most ready to buy.

This matters more than you might think. If your conversion rate is 3% during peak hours but only 0.8% during off-hours, then at similar click costs an off-peak click is worth roughly a quarter of a peak-hour click, and an evenly spread budget buys a lot of them. Dayparting fixes this by concentrating spend when it matters most.

The Strategy Explained

Dayparting allocates more budget to specific time windows when your audience demonstrates higher conversion rates or lower cost per acquisition. Instead of letting Meta spend your daily budget evenly from midnight to midnight, you weight it toward your proven high-performance hours.

The approach requires analyzing your historical performance data. Meta's Ads Manager includes a breakdown by time of day that shows exactly when your conversions happen and what they cost. Look for patterns across at least 30 days of data to identify your consistently strong time windows.

Many businesses discover that their audience converts best during specific periods. E-commerce brands often see spikes during lunch breaks and evening browsing sessions. B2B companies might find Tuesday through Thursday mornings outperform everything else. Service businesses frequently see weekend patterns completely different from weekdays.

Once you've identified your peak windows, you can implement dayparting through ad scheduling rules or by adjusting bid strategies during specific hours. The goal isn't to eliminate spending during off-hours entirely—you still need some presence for testing and brand awareness—but to concentrate your budget where it generates the best returns.

Implementation Steps

1. Navigate to your Ads Manager and select your best-performing campaigns from the past 60 days, then open the 'Breakdown' menu and choose the time-of-day breakdown to see hourly performance patterns.

2. Export this data and calculate your average cost per conversion and conversion rate for each hour of the day, identifying the top 25% of hours that deliver your best efficiency.

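3. Create custom ad schedules that increase your bid caps or budgets by 30-50% during your peak hours while reducing them by 20-30% during your lowest-performing windows. Keep in mind that Meta's native ad scheduling switches delivery on or off by hour and requires a lifetime budget, so graduated hour-level adjustments usually rely on automated rules or a third-party tool.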

4. Monitor for two weeks and adjust your time windows based on whether the concentrated budget improved overall campaign efficiency or if you hit saturation during peak hours.
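
Step 2 is a simple aggregation once the hourly breakdown is exported. The sketch below assumes the export has been reduced to `(hour, spend, clicks, conversions)` rows; that row layout, the function names, and the sample numbers are all hypothetical and not a Meta export format.

```python
import math


def hourly_efficiency(rows):
    """Aggregate rows of (hour, spend, clicks, conversions) into per-hour CPA and CVR."""
    stats = {}
    for hour, spend, clicks, conversions in rows:
        s = stats.setdefault(hour, {"spend": 0.0, "clicks": 0, "conversions": 0})
        s["spend"] += spend
        s["clicks"] += clicks
        s["conversions"] += conversions
    result = {}
    for hour, s in stats.items():
        # Hours with no conversions get an infinite CPA so they rank last.
        cpa = s["spend"] / s["conversions"] if s["conversions"] else math.inf
        cvr = s["conversions"] / s["clicks"] if s["clicks"] else 0.0
        result[hour] = {"cpa": cpa, "cvr": cvr}
    return result


def peak_hours(efficiency, top_share=0.25):
    """Return the hours in the best `top_share` of all hours, ranked by lowest CPA."""
    ranked = sorted(efficiency, key=lambda h: efficiency[h]["cpa"])
    keep = max(1, math.ceil(len(ranked) * top_share))
    return sorted(ranked[:keep])


# Hypothetical sample covering eight hours of the day.
rows = [
    (2, 40.0, 100, 1),
    (3, 30.0, 80, 1),
    (9, 50.0, 200, 4),
    (12, 60.0, 300, 9),
    (13, 60.0, 280, 8),
    (15, 45.0, 150, 3),
    (20, 70.0, 400, 12),
    (21, 75.0, 350, 9),
]
efficiency = hourly_efficiency(rows)
print(peak_hours(efficiency))  # with this sample data: [12, 20]
```

Ranking by CPA rather than conversion rate alone is a design choice here: it accounts for hours where clicks are cheaper or more expensive, which conversion rate by itself would hide.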

Pro Tips

Dayparting effectiveness varies significantly by industry and audience. Don't assume your patterns match industry averages. Always analyze your own data. Also consider that dayparting works best with sufficient daily budget—if you're only spending $50 per day, the administrative overhead might outweigh the efficiency gains.

5. Funnel-Stage Budget Weighting

The Challenge It Solves

Most advertisers obsess over bottom-of-funnel conversion campaigns while starving their top-of-funnel awareness efforts. The short-term logic makes sense—conversion campaigns generate immediate revenue. But this creates a slow-motion disaster as your prospect pool shrinks and your cost per acquisition creeps upward.

The opposite mistake is equally common: brands dump huge budgets into awareness campaigns that build massive reach but never convert that attention into customers. Without proper budget weighting across funnel stages, you're either building a leaky bucket or running a bucket with no water flowing in.

The Strategy Explained

Funnel-stage budget weighting allocates proportional budget across awareness, consideration, and conversion campaigns based on your business model and current growth phase. This ensures you're simultaneously filling your funnel with new prospects while nurturing and converting them efficiently.

A typical mature business might allocate 30% to awareness campaigns targeting cold audiences, 20% to consideration campaigns engaging warm audiences who've interacted but not converted, and 50% to conversion campaigns focused on closing ready buyers. These ratios shift dramatically based on your situation.

Early-stage businesses often need to weight heavier toward awareness—perhaps 50-30-20—to build their initial prospect pool. Brands with long sales cycles might push more budget to consideration campaigns that nurture over time. Businesses with strong organic traffic can often reduce awareness spend and concentrate on conversion campaigns.

The key is recognizing that each funnel stage feeds the next. Your conversion campaigns can only work with the audience your awareness campaigns build. Your consideration campaigns depend on both. This interconnection means you can't optimize one stage in isolation without affecting the others.

Implementation Steps

1. Audit your current campaigns and categorize each into awareness (cold audiences, no prior engagement), consideration (engaged audiences, website visitors, content consumers), or conversion (retargeting, cart abandoners, high-intent audiences).

2. Calculate what percentage of your total budget currently goes to each funnel stage and compare it to your business needs—are you starving top-of-funnel while your prospect pool shrinks?

3. Define your target allocation ratios based on your business stage, typical customer journey length, and whether you're prioritizing growth or efficiency, then create a 30-day transition plan to reach those targets.

4. Track leading indicators for each stage: reach and engagement for awareness, click-through rates and landing page actions for consideration, conversion rate and ROAS for conversion campaigns, adjusting allocations quarterly based on how each stage performs.
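
Steps 1 through 3 amount to comparing your current spend shares against a target mix. The following sketch shows that comparison under simple assumptions: each campaign has already been tagged with one of three stage labels, and the target is the 30-20-50 example from above. The function names and spend figures are hypothetical.

```python
def stage_shares(campaigns):
    """Turn a list of (stage, spend) pairs into each stage's share of total spend."""
    totals = {}
    for stage, spend in campaigns:
        totals[stage] = totals.get(stage, 0.0) + spend
    total = sum(totals.values())
    if total <= 0:
        raise ValueError("total spend must be positive")
    return {stage: spend / total for stage, spend in totals.items()}


def allocation_gaps(current, target):
    """Target share minus current share per stage; positive means underfunded."""
    return {
        stage: round(target[stage] - current.get(stage, 0.0), 4)
        for stage in target
    }


# Hypothetical account that is starving the top of the funnel.
campaigns = [
    ("awareness", 1000.0),
    ("consideration", 400.0),
    ("consideration", 600.0),
    ("conversion", 5000.0),
    ("conversion", 3000.0),
]
target = {"awareness": 0.30, "consideration": 0.20, "conversion": 0.50}

current = stage_shares(campaigns)
gaps = allocation_gaps(current, target)
print(current)
print(gaps)
```

A positive gap marks a stage that needs more budget to reach the target mix; multiplying each gap by total spend gives the dollar amount to shift over the transition period.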

Pro Tips

Full-funnel attribution remains challenging. Meta's attribution settings are built around short windows, typically 1-day or 7-day click plus 1-day view-through (the older 28-day click setting was retired in 2021), so they don't capture the full customer journey. Consider using incrementality testing or marketing mix modeling to understand how your awareness spend impacts downstream conversions, even when Meta's pixel doesn't connect the dots.

6. Creative Testing Isolation Method

The Challenge It Solves

Creative testing is where most budget allocation strategies fall apart. Advertisers either test too many variables simultaneously, making it impossible to identify what actually worked, or they test creatives within their main campaigns, letting unproven ads burn through budget meant for proven performers.

The result is expensive confusion. You can't scale confidently because you don't know which creative elements drive results. Your main campaigns suffer inconsistent performance as new creatives drag down overall metrics. Testing becomes a liability rather than your path to better performance.

The Strategy Explained

The Creative Testing Isolation Method dedicates a separate testing budget with controlled variables before scaling winning creatives to your main campaigns. This approach treats creative testing as its own distinct operation rather than something you bolt onto existing campaigns.

Here's how it works: you create dedicated testing campaigns that hold everything constant except the creative variable you're evaluating. Same audience. Same placement. Same optimization goal. Only the creative changes. This isolation lets you attribute performance differences directly to the creative rather than wondering if audience or placement shifts caused the results.

Your testing budget should be modest—typically 5-10% of your total spend. The goal isn't to generate massive revenue from testing campaigns. It's to identify winners efficiently so you can scale them with confidence in your main campaigns. Think of this as your research and development budget.

Once a creative proves itself in your testing environment by hitting your predetermined success threshold, you graduate it to your proven performer campaigns where it receives significantly more budget. Creatives that underperform get killed quickly before they waste serious money.

Implementation Steps

1. Create a separate testing campaign structure with ad sets that mirror your best-performing main campaign's settings but with a dedicated testing budget that's 5-10% of that main campaign's spend.

2. Define your creative testing variables clearly—test one element at a time such as video versus static image, different hooks in the first three seconds, or varying calls-to-action, keeping everything else identical.

3. Set clear graduation criteria before launching tests, such as achieving your target ROAS over at least 100 conversions or beating your control creative by 20% on your primary metric.

4. Run tests for a minimum of 7 days to account for day-of-week variations, then move winners to your main campaigns while archiving losers with notes on what didn't work to avoid retesting failed approaches.
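
Writing the graduation criteria down as code before launch makes them harder to bend afterward. The sketch below encodes the example criteria from steps 3 and 4 (target ROAS over at least 100 conversions, or a 20% lift over control, after a minimum of 7 days). The `CreativeResult` type, the `evaluate` function, and the three outcome labels are hypothetical, and ROAS is assumed to be the primary metric.

```python
from dataclasses import dataclass


@dataclass
class CreativeResult:
    spend: float
    revenue: float
    conversions: int
    days_run: int

    @property
    def roas(self):
        return self.revenue / self.spend if self.spend else 0.0


def evaluate(test, control, target_roas, min_conversions=100, min_lift=0.20, min_days=7):
    """Return 'keep_running', 'graduate', or 'archive' for a tested creative."""
    # Never judge a test before it has covered a full week of day-of-week variation.
    if test.days_run < min_days:
        return "keep_running"
    hits_target = test.conversions >= min_conversions and test.roas >= target_roas
    beats_control = test.roas >= control.roas * (1 + min_lift)
    return "graduate" if hits_target or beats_control else "archive"


control = CreativeResult(spend=1000.0, revenue=3000.0, conversions=90, days_run=30)
winner = CreativeResult(spend=1000.0, revenue=4200.0, conversions=120, days_run=8)
too_early = CreativeResult(spend=300.0, revenue=1500.0, conversions=30, days_run=3)
loser = CreativeResult(spend=1000.0, revenue=2000.0, conversions=60, days_run=8)

for label, result in [("winner", winner), ("too_early", too_early), ("loser", loser)]:
    print(label, evaluate(result, control, target_roas=4.0))
```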

Pro Tips

Structured testing frameworks tend to be more efficient than ad-hoc creative changes because each test answers one question at a time. Build a testing calendar that spaces out your experiments so you're not trying to evaluate five different creative tests simultaneously. Sequential testing with clear success criteria consistently beats chaotic everything-at-once approaches.

7. AI-Powered Dynamic Allocation

The Challenge It Solves

Everything we've covered so far requires manual monitoring and adjustment. You need to check performance data, calculate ROAS thresholds, reallocate budgets, and adjust bids. Even with clear frameworks, this consumes hours every week. Miss a day of monitoring and you might burn through budget on a campaign that suddenly stopped performing.

Human monitoring also introduces lag. By the time you notice a performance shift and react, you've already wasted budget or missed scaling opportunities. AI-powered optimization solves this by continuously analyzing performance signals and adjusting allocation in real-time without human intervention.

The Strategy Explained

AI-powered dynamic allocation uses machine learning to automatically shift budget between campaigns, ad sets, and ads based on real-time performance analysis. Instead of weekly or daily manual reviews, the system monitors every impression and adjusts allocation continuously.

The sophistication here goes beyond Meta's built-in Campaign Budget Optimization. Advanced AI systems analyze patterns across your entire account history, identifying which creative elements, audience combinations, and campaign structures have historically performed best. They then use these insights to make allocation decisions that consider not just current performance but predicted future performance.

These systems can process signals humans miss. They notice that certain creatives perform better with specific audience segments. They identify time-of-day patterns that vary by campaign. They detect early warning signs that a campaign is about to enter fatigue before your metrics obviously deteriorate.

The result is budget that flows automatically toward your best opportunities while pulling back from underperformers faster than any human could manage manually. Budget pacing strategies help prevent overspend during low-conversion periods while ensuring you maximize investment during high-performance windows.

Implementation Steps

1. Evaluate AI-powered optimization platforms that integrate with Meta's API and offer automated budget allocation features, prioritizing those that provide transparency into their decision-making rather than black-box automation.

2. Start with a controlled test using 20-30% of your total Meta budget, allowing the AI system to manage allocation for this subset while you maintain manual control over the rest to compare performance.

3. Define your optimization goals clearly within the AI system—whether you're prioritizing ROAS, cost per acquisition, conversion volume, or a custom metric based on your business model.

4. Monitor the AI's decisions for the first month to understand its logic and build confidence, then gradually expand the percentage of budget under AI management as you validate improved performance versus your manual approach.
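
The controlled test in steps 2 and 4 comes down to comparing the AI-managed slice against the manually managed remainder and expanding only when the AI slice wins. The sketch below shows one simple way to express that rule. It does not model any particular vendor's product: the function names, the 10-point expansion step, and the sample figures are assumptions made for illustration.

```python
def roas(spend, revenue):
    """Return on ad spend; zero when nothing was spent."""
    return revenue / spend if spend else 0.0


def next_ai_share(current_share, ai_spend, ai_revenue, manual_spend, manual_revenue,
                  step=0.10, max_share=1.0):
    """Raise the AI-managed budget share by `step` only if it out-performed manual."""
    if roas(ai_spend, ai_revenue) > roas(manual_spend, manual_revenue):
        return round(min(max_share, current_share + step), 2)
    return current_share


# Hypothetical first month: 25% of a $10,000 budget under AI management.
share = next_ai_share(
    current_share=0.25,
    ai_spend=2500.0, ai_revenue=9000.0,          # 3.6x ROAS
    manual_spend=7500.0, manual_revenue=24000.0,  # 3.2x ROAS
)
print(share)
```

A single month's ROAS comparison is a coarse signal. In practice you would also want the two groups to contain comparable campaigns and enough conversions for the difference to be meaningful.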

Pro Tips

AI-powered optimization tools can process performance data faster than manual monitoring allows, but they still need quality input data to make good decisions. Ensure your conversion tracking is accurate and you have sufficient conversion volume—these systems work best with at least 50-100 conversions per week to identify meaningful patterns. Starting AI optimization with insufficient data leads to unstable recommendations.

Putting It All Together

Budget allocation isn't a set-it-and-forget-it decision. It's an ongoing optimization process that compounds your results when done systematically.

Start with the 70-20-10 framework as your foundation. This gives you the structure to balance proven performance against necessary innovation without gambling your entire budget on unproven approaches. Get this framework in place first, even if you implement nothing else.

Next, make your CBO versus ad set budget decisions strategically. Use Campaign Budget Optimization for campaigns where you're testing similar variations and want Meta to find winners quickly. Use ad set budgets when you need to enforce specific allocations across different funnel stages or audience types. Don't default to one approach for everything.

Layer in your performance-based ROAS thresholds to create objective rules for when to scale, maintain, or cut budget. This removes emotion from decisions and ensures your money flows toward what's actually working based on data rather than hope.

As you gather more data, add dayparting to concentrate spend during your proven high-conversion windows. Simultaneously implement funnel-stage budget weighting to ensure you're building pipeline while converting it. These two strategies work together to optimize both when and where your budget flows.

Create your creative testing isolation structure to systematically identify winning ads before scaling them. This prevents unproven creatives from dragging down your main campaign performance while giving you a reliable pipeline of validated ads to scale.

Finally, consider AI-powered tools to automate the ongoing monitoring and adjustment these strategies require. The right automation doesn't replace strategic thinking—it executes your strategy faster and more consistently than manual management allows.

The key principle underlying all of these strategies: budget allocation requires continuous monitoring and adjustment based on performance data. Markets shift. Audience behavior changes. Creative fatigue sets in. What worked last month might not work this month. The frameworks we've covered give you the structure to adapt systematically rather than reactively.
