You spent $10,000 on Meta ads last month. Your dashboard shows 2.3 million impressions, 47,000 clicks, and a 2.0% CTR. But here's the question keeping you up at night: Did those ads actually work?

If you're like most media buyers, you're drowning in metrics but starving for insights. Your advertising platforms give you dozens of data points: CPM, CPC, CTR, frequency, quality rankings, engagement rates, video completion percentages, landing page views, and on and on. Yet when your boss asks "Are these ads profitable?" or "Should we scale this campaign?", you find yourself staring at spreadsheets trying to piece together an answer.

Here's the uncomfortable truth: most advertisers can tell you what happened, but not whether it worked.

The problem isn't lack of data. It's that traditional dashboards show activity without context. They tell you 47,000 people clicked your ad, but not whether those clicks turned into customers. They show you spent $10,000, but not whether you made $8,000 or $18,000 back. Numbers without business context are just noise.

This creates real consequences. Wasted budget on campaigns that look good on paper but don't drive revenue. Missed opportunities to scale winners because you can't confidently identify what's working. Hours spent pulling reports from multiple platforms instead of optimizing campaigns. And perhaps worst of all—the inability to prove ROI to stakeholders who control your budget.

Think of it like checking your bank balance without knowing your bills. Sure, you see numbers, but you have no idea if you're ahead or behind. That's what measuring ad effectiveness feels like without a systematic framework.

But here's what changes everything: the best media buyers don't track more metrics—they track the right metrics with ruthless focus. They've built measurement systems that reveal winning patterns, not just vanity numbers. They know their 3-5 critical metrics cold, and they can tell you within seconds whether a campaign is working or needs adjustment.

That's exactly what this guide will teach you to build. Not another overwhelming list of metrics to track, but a systematic framework for identifying what actually matters for your specific goals. You'll learn how to cut through the noise, establish measurement infrastructure that works automatically, and create visibility into the patterns that drive real business results.

By the end, you'll know exactly which metrics reveal whether your ads are profitable, how to set up tracking that eliminates manual reporting, and how to use measurement data to make confident scaling decisions. More importantly, you'll understand how to build this system once and benefit from it forever—turning measurement from a time-consuming burden into a competitive advantage.

This isn't about tracking everything. It's about tracking smarter. Let's walk through how to build a measurement system that actually tells you whether your ads are working—and what to do about it.

Step 1: Define Your North Star Metrics (The 3-5 Numbers That Actually Matter)

Before you can measure whether your ads work, you need to define what "working" means for your specific business. This sounds obvious, but most advertisers skip this step and end up tracking everything, which means they're effectively tracking nothing.

Your North Star metrics are the 3-5 numbers that directly connect ad spend to business outcomes. Not vanity metrics like impressions or reach. Not intermediate metrics like CTR or engagement rate. The metrics that answer the fundamental question: "Did we make more money than we spent?"

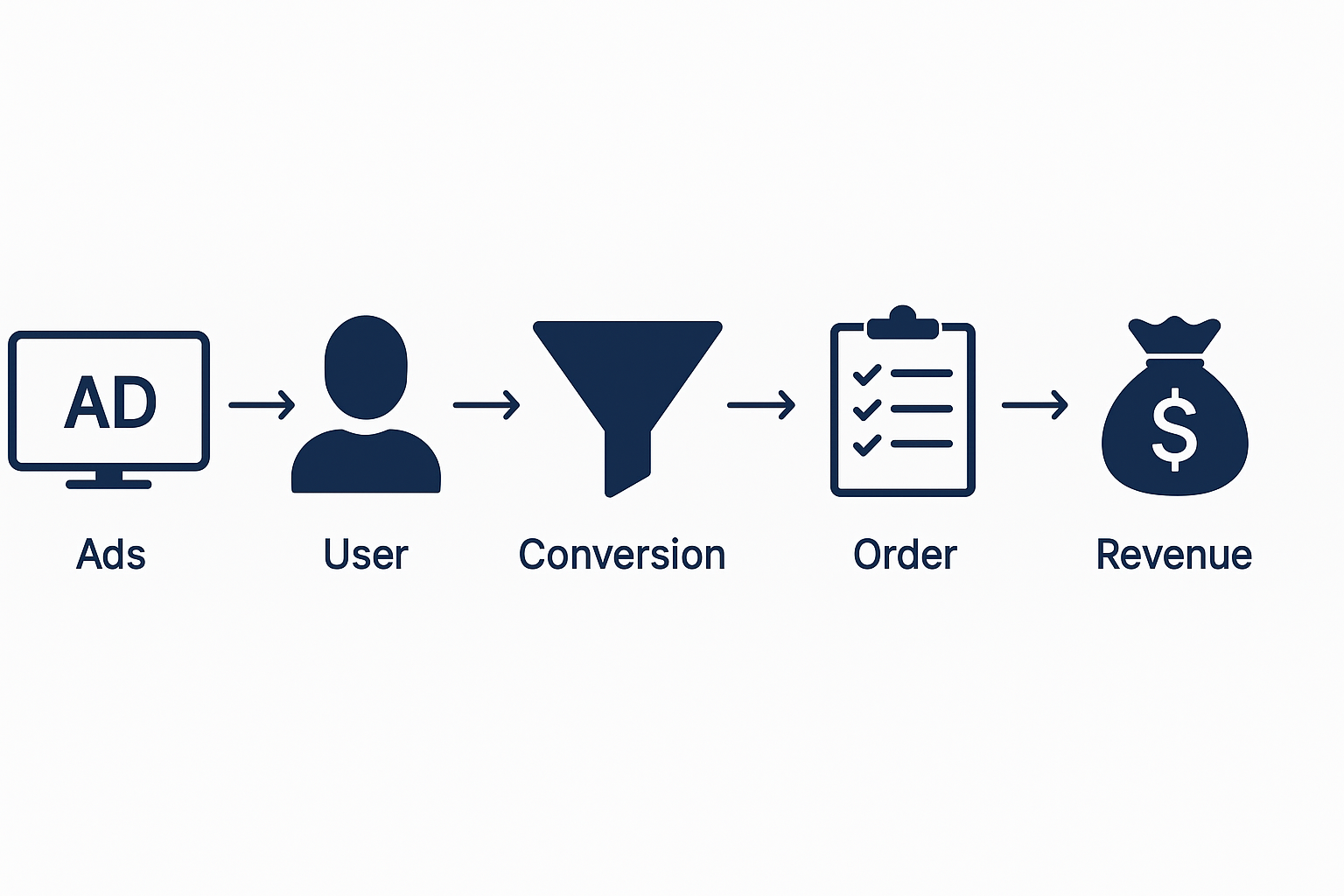

Here's how to identify yours. Start by mapping your customer journey from ad impression to revenue. For most businesses, this looks something like: Ad View → Click → Landing Page → Lead/Sign-up → Qualified Lead → Customer → Revenue. Your North Star metrics should measure the critical conversion points in this journey, plus the economic outcome.

For e-commerce businesses, your core metrics typically include: Cost Per Purchase (how much you spend to acquire a customer), Return on Ad Spend or ROAS (revenue generated per dollar spent), Customer Acquisition Cost or CAC (total cost to acquire a customer, including all touchpoints), and Average Order Value or AOV (revenue per transaction). Together, these four numbers show whether your ads pay for themselves; pair them with your profit margins to judge true profitability.
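As a minimal sketch, three of these metrics fall straight out of period totals. The figures below are hypothetical (they reuse the intro's $10,000 spend and $18,000 outcome), and CAC is left out because it needs non-ad acquisition costs that an ad export alone won't contain.

```python
from dataclasses import dataclass


@dataclass
class EcommerceResults:
    ad_spend: float  # total ad spend for the period
    purchases: int   # attributed purchases
    revenue: float   # attributed revenue


def ecommerce_metrics(r: EcommerceResults) -> dict:
    """Compute core e-commerce North Star metrics from period totals."""
    if r.purchases == 0 or r.ad_spend == 0:
        raise ValueError("need non-zero spend and purchases")
    return {
        "cost_per_purchase": r.ad_spend / r.purchases,
        "roas": r.revenue / r.ad_spend,  # revenue per $1 of ad spend
        "aov": r.revenue / r.purchases,  # revenue per order
    }


m = ecommerce_metrics(EcommerceResults(ad_spend=10_000, purchases=200, revenue=18_000))
# cost_per_purchase = 50.0, roas = 1.8, aov = 90.0
```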

For lead generation businesses, focus on: Cost Per Lead (what you pay for each contact), Lead-to-Customer Conversion Rate (what percentage of leads become buyers), Customer Lifetime Value or LTV (total revenue per customer over time), and LTV to CAC Ratio (whether acquisition costs are sustainable). If your CAC is $500 but your LTV is $2,000, you have a profitable model. If it's reversed, you're burning money.
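These lead-gen metrics chain together: CAC equals Cost Per Lead divided by the lead-to-customer rate. A minimal sketch, assuming ad spend is the only acquisition cost and using hypothetical counts chosen to reproduce the $500 CAC / $2,000 LTV example:

```python
def lead_gen_economics(ad_spend: float, leads: int, customers: int, ltv: float) -> dict:
    """Derive lead-gen unit economics from funnel counts (ad spend only)."""
    cost_per_lead = ad_spend / leads
    lead_to_customer = customers / leads
    cac = ad_spend / customers  # same as cost_per_lead / lead_to_customer
    return {
        "cost_per_lead": cost_per_lead,
        "lead_to_customer_rate": lead_to_customer,
        "cac": cac,
        # > 1 means lifetime revenue exceeds acquisition cost (margins not included)
        "ltv_to_cac": ltv / cac,
    }


econ = lead_gen_economics(ad_spend=10_000, leads=200, customers=20, ltv=2_000)
# cost_per_lead = 50.0, lead_to_customer_rate = 0.1, cac = 500.0, ltv_to_cac = 4.0
```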

For SaaS and subscription businesses, track: Cost Per Trial/Sign-up (acquisition cost for free users), Trial-to-Paid Conversion Rate (activation success), Monthly Recurring Revenue or MRR per cohort (revenue trajectory), and Payback Period (how long until you recover acquisition costs). Understanding when you break even on a customer determines how aggressively you can scale, which is why learning how to create effective ad strategies becomes essential for sustainable growth.
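Payback Period is CAC divided by what a customer contributes each month. The sketch below is one way to compute it, assuming flat monthly revenue per customer and no churn; the optional `gross_margin` parameter (an assumption, not from the text) lets you compute payback on gross profit rather than revenue.

```python
import math


def payback_months(cac: float, mrr_per_customer: float, gross_margin: float = 1.0) -> int:
    """Whole months of subscription revenue needed to recover CAC.

    gross_margin defaults to 1.0 (revenue-based payback); pass e.g. 0.75
    to compute payback on gross profit instead. Churn is ignored.
    """
    monthly_contribution = mrr_per_customer * gross_margin
    if monthly_contribution <= 0:
        raise ValueError("monthly contribution must be positive")
    return math.ceil(cac / monthly_contribution)  # round up to whole months


payback_months(cac=600, mrr_per_customer=50)                     # 12
payback_months(cac=600, mrr_per_customer=50, gross_margin=0.75)  # 16
```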

The key is ruthless focus. If you're tracking 15 metrics, you're not really tracking anything—you're just collecting data. Pick the 3-5 that directly measure profitability for your business model, and make those your obsession.

Here's a practical exercise: Write down every metric you currently track. Now cross out anything that doesn't directly connect to revenue or profitability. Cross out anything you can't take action on. Cross out anything that's just interesting but not critical. What's left? Those are your North Star metrics.

Once you've identified them, set clear thresholds for each. What's your target Cost Per Purchase? What ROAS makes a campaign profitable? What conversion rate indicates a winning funnel? These thresholds become your decision-making framework. When a metric beats its threshold (a Cost Per Purchase below target, a ROAS above it), you scale. When it misses, you optimize or kill the campaign.
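Because "beating" a threshold means lower for cost metrics and higher for ratios like ROAS, it helps to record the direction alongside each target. A minimal sketch with hypothetical targets and observed values:

```python
from dataclasses import dataclass


@dataclass
class Threshold:
    metric: str
    target: float
    higher_is_better: bool  # True for ROAS, False for cost metrics


def meets_threshold(t: Threshold, value: float) -> bool:
    """True if the observed value is on the right side of the target."""
    return value >= t.target if t.higher_is_better else value <= t.target


# Hypothetical entries from a one-page "measurement constitution"
thresholds = [
    Threshold("cost_per_purchase", 45.0, higher_is_better=False),
    Threshold("roas", 2.5, higher_is_better=True),
]
observed = {"cost_per_purchase": 42.10, "roas": 2.2}
results = {t.metric: meets_threshold(t, observed[t.metric]) for t in thresholds}
# {'cost_per_purchase': True, 'roas': False}
```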

Document these metrics and thresholds in a simple one-page document. Share it with your team. Reference it in every campaign review. This becomes your measurement constitution—the standard against which every ad, every campaign, every creative decision is evaluated.

Most importantly, resist the temptation to add more metrics over time. The power of North Star metrics comes from focus, not comprehensiveness. You want a dashboard you can glance at for 30 seconds and know exactly whether your advertising is working. That only happens when you've eliminated everything that doesn't matter.

Step 2: Establish Your Baseline (Know Where You're Starting From)

You can't measure improvement without knowing your starting point. Yet most advertisers launch campaigns, make changes, and have no idea whether things got better or worse because they never established a baseline.

Your baseline is your performance benchmark before optimization. It's the control group against which you measure every change. Without it, you're flying blind—making decisions based on gut feel rather than data.

Start by running a baseline measurement period. This should be 7-14 days of consistent ad delivery with no major changes. You're not trying to optimize during this period. You're simply collecting clean data on your current performance across your North Star metrics.

During this baseline period, keep everything stable. Don't change budgets. Don't swap creatives. Don't adjust targeting. Don't modify bids. The goal is to understand your natural performance level without interference. Think of it like taking your temperature before starting medication—you need to know normal before you can measure improvement.

Document your baseline metrics in a simple table. For each North Star metric, record: the metric name, the baseline value, the date range measured, and the sample size (impressions, clicks, conversions). For example: "Cost Per Purchase: $47.23, measured Nov 1-14, 2024, across 847 purchases." This becomes your reference point.
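One detail worth getting right: compute the baseline as total spend divided by total purchases over the window, not as an average of daily Cost Per Purchase values, which lets low-volume days skew the number. A sketch with hypothetical daily rows:

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class DailyRow:
    day: date
    spend: float
    purchases: int


def baseline_cost_per_purchase(rows: list[DailyRow]) -> dict:
    """Pooled baseline record: total spend / total purchases over the window."""
    total_spend = sum(r.spend for r in rows)
    total_purchases = sum(r.purchases for r in rows)
    return {
        "metric": "cost_per_purchase",
        "value": round(total_spend / total_purchases, 2),
        "start": min(r.day for r in rows),
        "end": max(r.day for r in rows),
        "sample_size": total_purchases,
    }


rows = [
    DailyRow(date(2024, 11, 1), 1_000.0, 20),
    DailyRow(date(2024, 11, 2), 1_200.0, 30),
    DailyRow(date(2024, 11, 3), 300.0, 2),
]
baseline = baseline_cost_per_purchase(rows)
# value = 48.08 over 52 purchases; averaging the daily CPPs (50, 40, 150) would give 80.0
```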

But here's what most advertisers miss: you need separate baselines for different segments. Your baseline performance will vary by platform (Meta vs. Google), audience (cold vs. warm), creative format (video vs. static), and campaign objective (awareness vs. conversion). Lumping everything together creates a meaningless average.

Create segment-specific baselines for your key variables. What's your baseline Cost Per Purchase for cold traffic on Meta? For retargeting on Google? For video ads vs. carousel ads? These segment baselines reveal where you have the most opportunity for improvement.

Pay special attention to variance in your baseline data. If your Cost Per Purchase ranges from $30 to $80 across the baseline period, that high variance tells you something important: your results are unstable, which means you have significant optimization opportunity. Low variance (consistent performance) suggests you're closer to your efficiency ceiling.
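To put a number on "high" versus "low" variance, one common choice (an assumption here, not something the framework prescribes) is the coefficient of variation: the standard deviation divided by the mean. The daily values below are hypothetical; the volatile series spans the $30 to $80 range mentioned above.

```python
from statistics import mean, stdev


def cpp_variability(daily_cpp: list[float]) -> dict:
    """Summarize day-to-day spread of Cost Per Purchase during the baseline."""
    avg = mean(daily_cpp)
    sd = stdev(daily_cpp)  # sample standard deviation
    return {
        "min": min(daily_cpp),
        "max": max(daily_cpp),
        "cv": sd / avg,  # coefficient of variation: spread relative to the mean
    }


stable = cpp_variability([48, 50, 52, 49, 51])    # cv about 0.03
volatile = cpp_variability([30, 80, 45, 70, 35])  # cv about 0.42
```

Where you draw the line between stable and unstable is a judgment call; comparing the coefficient of variation across segments is usually more informative than any fixed cutoff.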

Also establish baseline performance by day of week and time of day. Many businesses see dramatic performance differences based on timing. E-commerce might convert better on weekends. B2B might perform better Tuesday-Thursday. Understanding these patterns in your baseline prevents you from mistaking natural fluctuation for campaign performance.

Once you have your baseline, calculate your improvement targets. A realistic optimization goal is 15-30% improvement in your primary metric over 30-60 days. If your baseline Cost Per Purchase is $50, a 15-30% improvement puts your target at $35-42.50. These targets give you clear success criteria for your optimization efforts, and understanding how to improve ad engagement can help you achieve those targets more consistently.
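For a lower-is-better metric like Cost Per Purchase, the target range is just the baseline scaled down by the improvement percentages. A small sketch; the function name and defaults are illustrative:

```python
def target_range(baseline: float, min_improvement: float = 0.15,
                 max_improvement: float = 0.30) -> tuple[float, float]:
    """Return (stretch, conservative) targets for a lower-is-better cost metric."""
    return (round(baseline * (1 - max_improvement), 2),
            round(baseline * (1 - min_improvement), 2))


target_range(50.0)  # (35.0, 42.5)
```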

Document everything in a baseline report that includes: date range, sample size, metric values, segment breakdowns, variance analysis, and improvement targets. This report becomes your measurement foundation. Every future campaign review should reference back to this baseline to show progress.

Update your baseline quarterly or after major changes (new product, new market, major platform updates). Your baseline isn't static—it should evolve as your business and market conditions change. But within any given optimization cycle, your baseline stays fixed as your comparison point.

Step 3: Implement Full-Funnel Tracking (Connect Ads to Revenue)

Here's where most measurement systems break down: they track ad platform metrics (clicks, impressions, CTR) but lose visibility once users leave the platform. You can see that 1,000 people clicked your ad, but you have no idea if any of them became customers.

Full-funnel tracking solves this by connecting every stage of your customer journey—from ad impression to final purchase—in a single view. This is what separates advertisers who know their ads work from those who just hope they do.

The foundation of full-funnel tracking is proper conversion tracking setup. This means implementing tracking pixels, conversion APIs, and analytics tools that follow users from ad click through conversion. For Meta, that's the Meta Pixel and Conversions API. For Google, it's Google Ads conversion tracking and Google Analytics 4. For most businesses, you need both.

Start with pixel implementation. Install your tracking pixels on every page of your website—landing pages, product pages, checkout pages, thank you pages. The pixel fires when users take actions, sending data back to your ad platforms. This is what allows platforms to optimize for conversions and report on performance.

But pixels alone aren't enough anymore. iOS privacy changes and cookie restrictions mean pixel-only tracking can miss a significant share of conversions, often estimated at 30-50%. That's why you need Conversions API (CAPI) implementation. CAPI sends conversion data directly from your server to ad platforms, so it isn't subject to browser-side restrictions. The combination of pixel + CAPI gives you the most complete tracking possible.
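For Meta, a server-side Purchase event is a JSON object with the event name, timestamp, customer identifiers hashed with SHA-256 after normalizing, and the order value. The sketch below only builds the request body; the email, order ID, and URL are hypothetical, and the API version, pixel ID, and access token are placeholders to fill from Meta's Conversions API documentation. Reusing the browser pixel's event ID as `event_id` is what lets Meta deduplicate the pixel and server copies of the same purchase.

```python
import hashlib
import json
import time


def sha256_normalized(value: str) -> str:
    """Trim and lowercase an identifier such as an email, then SHA-256 hash it."""
    return hashlib.sha256(value.strip().lower().encode("utf-8")).hexdigest()


def build_purchase_event(email: str, value: float, currency: str,
                         event_id: str, page_url: str) -> dict:
    """Build one server-side Purchase event, including its value for ROAS reporting."""
    return {
        "event_name": "Purchase",
        "event_time": int(time.time()),  # Unix timestamp in seconds
        "event_id": event_id,            # must match the pixel's eventID for dedup
        "action_source": "website",
        "event_source_url": page_url,
        "user_data": {"em": [sha256_normalized(email)]},
        "custom_data": {"value": value, "currency": currency},
    }


event = build_purchase_event("  Jane@Example.com ", 89.00, "USD",
                             "order-10042", "https://shop.example/thank-you")
body = json.dumps({"data": [event]})
# POST body to https://graph.facebook.com/<API_VERSION>/<PIXEL_ID>/events
# with your access token; the version and IDs are placeholders.
```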

Set up conversion events for every meaningful action in your funnel. For e-commerce: ViewContent (product page views), AddToCart, InitiateCheckout, Purchase. For lead gen: ViewContent, Lead (form submission), Schedule (appointment booked), Purchase (customer conversion). For SaaS: ViewContent, StartTrial, Subscribe, Purchase. Each event reveals where users progress or drop off.

Implement value tracking for your conversion events. Don't just track that a purchase happened—track the purchase amount. This allows platforms to optimize for high-value conversions and gives you accurate ROAS data. Pass dynamic values using your e-commerce platform or CRM data.

Connect your ad platforms to your CRM or analytics system. This is where full-funnel tracking becomes powerful. When you can see that the $1,000 you spent on Meta ads generated 20 leads, and 5 of those leads became customers worth $10,000, you have complete visibility. Tools like Google Analytics 4, HubSpot, Salesforce, or custom data warehouses enable this connection.
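The join itself can be simple once each CRM lead carries the `utm_campaign` it arrived with. A minimal sketch, assuming spend is exported per campaign name and leads are stored as hypothetical (campaign, became_customer, revenue) tuples; leads from campaigns with no ad spend (such as organic) simply don't appear in the report.

```python
from collections import defaultdict


def campaign_report(spend_by_campaign: dict[str, float],
                    crm_leads: list[tuple[str, bool, float]]) -> dict[str, dict]:
    """Join ad-platform spend with CRM outcomes via the utm_campaign on each lead."""
    stats = defaultdict(lambda: {"leads": 0, "customers": 0, "revenue": 0.0})
    for campaign, became_customer, revenue in crm_leads:
        row = stats[campaign]
        row["leads"] += 1
        if became_customer:
            row["customers"] += 1
            row["revenue"] += revenue
    report = {}
    for campaign, spend in spend_by_campaign.items():
        row = stats[campaign]  # all zeros if the campaign produced no leads
        report[campaign] = {**row, "spend": spend, "roas": row["revenue"] / spend}
    return report


report = campaign_report(
    {"spring_sale": 1_000.0, "retargeting": 400.0},
    [
        ("spring_sale", True, 1_500.0),
        ("spring_sale", False, 0.0),
        ("spring_sale", True, 2_500.0),
        ("spring_sale", False, 0.0),
        ("retargeting", True, 600.0),
        ("retargeting", False, 0.0),
        ("(organic)", True, 900.0),  # no ad spend, so excluded from the report
    ],
)
# spring_sale: 4 leads, 2 customers, ROAS 4.0; retargeting: 2 leads, 1 customer, ROAS 1.5
```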

Set up UTM parameters for all your ad campaigns. UTMs are tags added to your URLs that track traffic sources in analytics. Use consistent naming conventions: utm_source (platform), utm_medium (ad type), utm_campaign (campaign name), utm_content (ad variation). This allows you to track performance in your analytics system even when platform tracking fails.
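Consistency is easiest to enforce if URLs are generated rather than typed by hand. A minimal sketch using Python's standard `urllib.parse`; the lowercasing rule is one possible convention (many analytics tools treat "Meta" and "meta" as different sources), and the example URL and values are hypothetical.

```python
from urllib.parse import parse_qsl, urlencode, urlparse, urlunparse


def add_utm(url: str, source: str, medium: str, campaign: str, content: str) -> str:
    """Append UTM parameters to a landing-page URL, keeping existing query params."""
    parts = urlparse(url)
    query = dict(parse_qsl(parts.query))
    query.update({
        # Lowercase everything so case variants don't split one source into several
        "utm_source": source.lower(),
        "utm_medium": medium.lower(),
        "utm_campaign": campaign.lower(),
        "utm_content": content.lower(),
    })
    return urlunparse(parts._replace(query=urlencode(query)))


tagged = add_utm("https://shop.example/spring?ref=nav", "Meta", "paid_social",
                 "Spring_Sale_2024", "video_a")
# 'https://shop.example/spring?ref=nav&utm_source=meta&utm_medium=paid_social&utm_campaign=spring_sale_2024&utm_content=video_a'
```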

Implement cross-device tracking where possible. Users often see ads on mobile but convert on desktop, or vice versa. Platforms like Meta and Google offer cross-device tracking through logged-in users. Make sure this is enabled so you don't undercount conversions that happen across devices.

Create a measurement dashboard that shows your full funnel in one view. This should display: ad spend by campaign, impressions and reach, clicks and CTR, landing page views, conversion events by stage (leads, trials, purchases), conversion rates at each stage, cost per conversion by stage, and total revenue and ROAS. When you can see the entire journey, you can identify exactly where optimization is needed, and leveraging PPC automation tools can help you manage and optimize these complex funnels more efficiently.

Test your tracking regularly. Run test purchases or conversions to verify that events fire correctly and data flows to all systems. Tracking breaks more often than you'd think—platform updates, website changes, tag manager issues. Monthly tracking audits prevent you from making decisions on incomplete data.

Document your tracking setup in a technical specification document. Include: what pixels are installed where, what conversion events are tracked, what values are passed, how systems connect, and who has access to what data. This documentation is invaluable when troubleshooting issues or onboarding new team members.

Step 4: Analyze Performance Patterns (Find What Actually Drives Results)

Now that you're tracking the right metrics with clean data, the real work begins: identifying the patterns that separate winning campaigns from losers. This is where measurement transforms from reporting to optimization.

Start with cohort analysis. Don't just look at overall performance—break it down by cohorts (groups with shared characteristics). Analyze performance by: traffic source (Meta vs. Google vs. other), audience type (cold vs. warm vs. retargeting), creative format (video vs. image vs. carousel), ad copy approach (benefit-focused vs. feature-focused), landing page version, and time period (day of week, time of day, seasonality).

Cohort analysis reveals hidden patterns. You might discover that video ads have a higher Cost Per Click but a lower Cost Per Purchase because they attract more qualified traffic. Or that cold traffic converts poorly initially but has higher lifetime value. These insights are invisible in aggregate data.

Implement winner/loser analysis. For any given metric, sort your campaigns, ad sets, or ads from best to worst performance. Then ask: What do the top 20% have in common? What do the bottom 20% have in common? The patterns that emerge tell you what to do more of and what to eliminate.
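Sorting and slicing is all the winner/loser split requires. The sketch below assumes a lower-is-better metric such as Cost Per Purchase and uses hypothetical ad IDs; in practice you would first drop ads with too few conversions to rank reliably.

```python
def top_and_bottom(ads: dict[str, float], share: float = 0.2,
                   lower_is_better: bool = True) -> tuple[list[str], list[str]]:
    """Split ads into the best and worst `share` by a metric like Cost Per Purchase."""
    ranked = sorted(ads, key=ads.get, reverse=not lower_is_better)  # best first
    k = max(1, int(len(ranked) * share))
    return ranked[:k], ranked[-k:]


cpp_by_ad = {"ad_01": 38.0, "ad_02": 61.5, "ad_03": 44.2, "ad_04": 29.9, "ad_05": 72.0,
             "ad_06": 51.3, "ad_07": 40.8, "ad_08": 95.1, "ad_09": 33.4, "ad_10": 47.6}
winners, losers = top_and_bottom(cpp_by_ad)
# winners: ['ad_04', 'ad_09'], losers: ['ad_05', 'ad_08']
```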

Look at your top 5 performing ads by ROAS or Cost Per Purchase. Do they share creative elements (colors, layouts, hooks)? Messaging themes (pain points, benefits, social proof)? Targeting characteristics (demographics, interests, behaviors)? These commonalities are your winning formula. Double down on them.

Now look at your worst 5 performers. What patterns do they share? Are they all targeting cold audiences? Using certain creative formats? Leading with specific messages? These are your losing patterns. Eliminate them ruthlessly. Most advertisers waste budget trying to fix bad campaigns when they should just kill them and reallocate to winners.

Analyze your conversion funnel drop-off points. Where do users exit your funnel? If you're getting clicks but no landing page views, you have a page load issue. If you're getting landing page views but no conversions, you have a landing page or offer problem. If you're getting leads but no purchases, you have a sales process issue. Each drop-off point tells you where to focus optimization.
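Stage-to-stage conversion rates make the drop-off points explicit. A sketch with hypothetical counts; note that stages naturally convert at very different rates, so the lowest raw rate is only a starting point, and comparing each stage against its own baseline tells you more.

```python
def stage_conversion(funnel: list[tuple[str, int]]) -> list[tuple[str, float]]:
    """Conversion rate from each funnel stage to the next."""
    return [
        (f"{a} -> {b}", n_b / n_a)
        for (a, n_a), (b, n_b) in zip(funnel, funnel[1:])
    ]


funnel = [("clicks", 4_000), ("landing_page_views", 3_000),
          ("leads", 150), ("customers", 30)]
rates = stage_conversion(funnel)
weakest = min(rates, key=lambda pair: pair[1])
# rates: clicks -> landing_page_views 0.75, landing_page_views -> leads 0.05,
#        leads -> customers 0.2
```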

Track performance trends over time. Is your Cost Per Purchase increasing or decreasing? Is your ROAS improving or declining? Are certain campaigns showing fatigue (declining performance over time)? Trend analysis helps you spot problems before they become crises and opportunities before competitors notice them.

Implement statistical significance testing for major changes. When you test a new creative or audience, don't make decisions based on 10 conversions. Wait until you have enough data for statistical confidence; a common rule of thumb is 50-100 conversions per variation, and detecting small differences takes more. Platform built-in A/B testing features help ensure your decisions are based on real patterns, not random noise.
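To check significance yourself, a standard option (swapped in here as an illustration, not a method the platforms necessarily use) is the two-proportion z-test on conversion rates. The sketch uses the normal approximation from Python's `statistics.NormalDist`; the conversion counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value for a difference in conversion rates (pooled z-test)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


# 10 vs 14 conversions on 1,000 clicks each looks like a 40% lift...
small = two_proportion_p_value(10, 1_000, 14, 1_000)     # roughly 0.41: not significant
# ...while the same rates on 10x the traffic clear the usual 0.05 bar
large = two_proportion_p_value(100, 10_000, 140, 10_000)  # about 0.009
```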

Create a performance review cadence. Weekly reviews for tactical adjustments (pause poor performers, increase budgets on winners). Monthly reviews for strategic analysis (what patterns emerged, what hypotheses to test next). Quarterly reviews for baseline updates and goal setting. Consistent review rhythms ensure insights turn into action, and using top AI-driven ad creative generation tools can help you quickly test new creative variations based on your performance insights.

Build a hypothesis log. Every time you identify a pattern, create a hypothesis to test. "Video ads outperform static ads for cold traffic" becomes "Test: Allocate 70% of cold traffic budget to video ads and measure impact on Cost Per Purchase." Track these hypotheses and their results. Over time, you build a knowledge base of what works for your specific business.

Share insights across your team. Create a simple weekly insights email or Slack update highlighting: the top performing campaign/ad and why it's winning, the worst performing campaign/ad and why it's failing, one key pattern discovered this week, and one optimization action taken based on data. This keeps everyone aligned on what's working and builds a culture of data-driven decision making.

The goal of pattern analysis isn't to create perfect campaigns—it's to create a continuous improvement system. Each week you learn something new. Each month you eliminate something that doesn't work and amplify something that does. Over time, this compounds into a massive competitive advantage.

Common Measurement Mistakes (And How to Avoid Them)

Even with a solid framework, most advertisers make predictable mistakes that undermine their measurement systems. Here are the most common pitfalls and how to avoid them.

Mistake #1: Tracking too many metrics. When you track 20 metrics, you track nothing. You end up with dashboard paralysis—too much data, no clear action. The fix: Ruthlessly focus on your 3-5 North Star metrics. Everything else is noise. If a metric doesn't directly inform a decision, stop tracking it.

Mistake #2: Ignoring attribution windows. A user might see your ad on Monday, think about it, research alternatives, and purchase on Friday. If you only look at same-day conversions, you're missing most of your results. The fix: Use 7-day click and 1-day view attribution windows as your standard. This captures most conversion journeys without over-attributing.
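The window rule itself is a simple time comparison. A minimal sketch of a 7-day-click / 1-day-view check for a single touchpoint; real platforms also decide which of several touchpoints gets credit, which this ignores. The dates are hypothetical (November 4, 2024 was a Monday).

```python
from datetime import datetime, timedelta

CLICK_WINDOW = timedelta(days=7)
VIEW_WINDOW = timedelta(days=1)


def attributed(touch_type: str, touch_time: datetime, conversion_time: datetime) -> bool:
    """Does a conversion fall inside a 7-day-click / 1-day-view window?"""
    if touch_type not in ("click", "view"):
        raise ValueError("touch_type must be 'click' or 'view'")
    lag = conversion_time - touch_time
    if lag < timedelta(0):
        return False  # conversion happened before the ad interaction
    window = CLICK_WINDOW if touch_type == "click" else VIEW_WINDOW
    return lag <= window


monday = datetime(2024, 11, 4, 9, 0)
friday = datetime(2024, 11, 8, 18, 0)
attributed("click", monday, friday)  # True: 4 days 9 hours after the click
attributed("view", monday, friday)   # False: outside the 1-day view window
```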

Mistake #3: Comparing apples to oranges. Comparing cold traffic performance to retargeting performance is meaningless—they serve different purposes. The fix: Always segment your analysis. Compare cold traffic to cold traffic, retargeting to retargeting, video to video. Context matters.

Mistake #4: Making decisions on insufficient data. Changing your campaign after 10 conversions is like flipping a coin twice and declaring it unfair. The fix: Wait for statistical significance. For many tests, a practical minimum is 50-100 conversions per variation, and smaller differences need more. Patience prevents costly mistakes.

Mistake #5: Forgetting about incrementality. Just because someone clicked your ad and bought doesn't mean the ad caused the purchase. They might have bought anyway. The fix: Run periodic holdout tests where you exclude a small, randomly selected audience segment from ads and compare their conversion rate to your ad-exposed audience. This reveals true incremental impact.
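The holdout comparison reduces to two conversion rates. A sketch, assuming the holdout was randomly assigned (otherwise the comparison is biased) and using hypothetical counts; in practice you would also check that the difference is statistically significant.

```python
def incremental_lift(exposed_conv: int, exposed_n: int,
                     holdout_conv: int, holdout_n: int) -> dict:
    """Compare conversion rates of ad-exposed users vs. a randomized holdout."""
    exposed_rate = exposed_conv / exposed_n
    holdout_rate = holdout_conv / holdout_n  # the "would have bought anyway" rate
    incremental_conversions = (exposed_rate - holdout_rate) * exposed_n
    return {
        "lift": (exposed_rate - holdout_rate) / holdout_rate,
        "incremental_conversions": incremental_conversions,
        "share_incremental": incremental_conversions / exposed_conv,
    }


result = incremental_lift(exposed_conv=900, exposed_n=90_000,
                          holdout_conv=60, holdout_n=10_000)
# lift about 0.67; only about 360 of the 900 conversions (40%) were caused by the ads
```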

Mistake #6: Ignoring profit margins. A 5x ROAS sounds great until you realize that on a $100 order, your product costs $90 to make and fulfill: the $20 you spent on ads to win that sale wipes out the $10 of gross profit. The fix: Track profit-based metrics, not just revenue-based ones. Calculate your true Cost Per Acquisition including all costs, and measure it against profit margins, not just revenue.
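A quick derivation makes the margin point concrete. With revenue R, gross margin m, and ad spend S, gross profit after ads is R·m − S, which is non-negative only when R/S ≥ 1/m. So break-even ROAS is 1 divided by gross margin (ignoring other fixed costs). A sketch using a $100 order with $90 of product and fulfillment cost:

```python
def break_even_roas(gross_margin: float) -> float:
    """ROAS at which gross profit exactly covers ad spend (ignores fixed costs)."""
    return 1 / gross_margin


def profit_after_ads(revenue: float, cogs: float, ad_spend: float) -> float:
    """Gross profit left after product/fulfillment costs and ad spend."""
    return revenue - cogs - ad_spend


break_even_roas(0.10)         # 10.0: a 10%-margin product needs 10x ROAS
profit_after_ads(100, 90, 20)  # -10: a 5x ROAS that still loses money
```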

Mistake #7: Over-optimizing for short-term metrics. Optimizing purely for lowest Cost Per Purchase might attract low-value customers with high return rates. The fix: Include lifetime value in your measurement framework. A higher upfront acquisition cost is fine if those customers have higher LTV.

Mistake #8: Trusting platform reporting blindly. Ad platforms have incentives to show favorable results. They may over-attribute conversions or use generous attribution windows. The fix: Implement independent tracking through Google Analytics 4 or your own analytics system. Compare platform reporting to independent tracking regularly.

Mistake #9: Not accounting for external factors. Your Cost Per Purchase might spike because your competitor launched a sale, or drop because you were featured in the news. The fix: Maintain a campaign log noting external factors (competitor actions, PR, seasonality, product changes). This context prevents misinterpreting data.

Mistake #10: Analysis paralysis. Spending hours analyzing data but never taking action is worse than not measuring at all. The fix: Set a decision-making threshold. If a campaign is 20% above your target Cost Per Purchase after reaching significance, pause it. If it's 20% below, scale it. Action beats perfect analysis.
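The pause/scale rule can be written down so nobody has to debate it in a review meeting. A sketch for a lower-is-better cost metric; the "hold" outcome for campaigns within the ±20% band and the "wait" outcome before significance are illustrative additions, not part of the rule as stated.

```python
def decide(cpp: float, target_cpp: float, significant: bool, band: float = 0.20) -> str:
    """Pause/scale/hold rule for Cost Per Purchase against its target."""
    if not significant:
        return "wait"  # not enough data yet
    if cpp >= target_cpp * (1 + band):
        return "pause"
    if cpp <= target_cpp * (1 - band):
        return "scale"
    return "hold"  # within the band: keep optimizing


decide(62.0, 50.0, significant=True)   # 'pause' (24% above target)
decide(38.0, 50.0, significant=True)   # 'scale' (24% below target)
decide(55.0, 50.0, significant=True)   # 'hold'
decide(80.0, 50.0, significant=False)  # 'wait'
```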

The key to avoiding these mistakes is building systems, not relying on discipline. Create dashboards that show the right metrics. Set up automated alerts for performance thresholds. Establish decision-making frameworks that remove ambiguity. When measurement is systematic, mistakes become rare.

Advanced Measurement Techniques (For Scaling Past $50K/Month)

Once you've mastered the fundamentals, these advanced techniques help you optimize at scale and extract maximum value from your ad spend.

Multi-touch attribution modeling. Basic attribution gives all credit to the last click, but most customer journeys involve multiple touchpoints. A user might see a Facebook ad, click a Google ad, and then convert via email. Multi-touch attribution distributes credit across touchpoints based on their influence. Tools like Google Analytics 4, HubSpot, or dedicated attribution platforms like Rockerbox or Northbeam enable this. The insight: you discover which channels work together to drive conversions, not just which gets the last click.
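Two simple rule-based models illustrate how credit gets distributed; dedicated tools typically use data-driven models instead. The sketch shows linear credit (equal shares) and a position-based split using the common 40/20/40 convention; the journey and the $90 order value are hypothetical. Last-click would give the full $90 to email.

```python
def linear_credit(path: list[str], value: float) -> dict[str, float]:
    """Split conversion value equally across every touchpoint."""
    credit: dict[str, float] = {}
    for touch in path:
        credit[touch] = credit.get(touch, 0.0) + value / len(path)
    return credit


def position_based_credit(path: list[str], value: float,
                          ends: float = 0.4) -> dict[str, float]:
    """40% to first touch, 40% to last, remaining 20% split across the middle."""
    if len(path) == 1:
        return {path[0]: value}
    if len(path) == 2:
        weights = [0.5, 0.5]
    else:
        middle = (1 - 2 * ends) / (len(path) - 2)
        weights = [ends] + [middle] * (len(path) - 2) + [ends]
    credit: dict[str, float] = {}
    for touch, w in zip(path, weights):
        credit[touch] = credit.get(touch, 0.0) + value * w
    return credit


journey = ["meta_ad", "google_ad", "email"]
lin = linear_credit(journey, 90.0)          # 30.0 each
pos = position_based_credit(journey, 90.0)  # meta_ad ~36, google_ad ~18, email ~36
```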

Customer lifetime value cohort analysis. Instead of measuring just first purchase, track the total value customers generate over time, segmented by acquisition source. You might discover that customers from Google have a lower Cost Per Acquisition but also lower LTV, while Meta customers cost more upfront but generate 3x more revenue over 12 months. This changes your entire acquisition strategy.

Incrementality testing through geo experiments. Run your ads in some geographic regions but not others (matched for similar demographics and behavior). Compare conversion rates between regions. The difference reveals your true incremental impact—how many conversions happened because of your ads vs. how many would have happened anyway. This is the gold standard for measurement but requires significant scale.

Creative element testing with dynamic creative optimization. Instead of testing complete ad variations, test individual elements (headlines, images, calls-to-action) systematically. Platforms like Meta's Dynamic Creative and Google's Responsive Search Ads automate this. The insight: you identify which specific elements drive performance, allowing you to create a library of winning components.

Predictive analytics for budget allocation. Use historical performance data to build models that predict which campaigns, audiences, or creatives will perform best. Allocate budget based on predicted performance, not just historical performance. Tools like Google's Smart Bidding or third-party platforms like Madgicx or Revealbot enable this. The advantage: you scale winners faster and cut losers earlier.

Cross-platform journey analysis. Track how users interact with your ads across multiple platforms before converting. Do they see a Meta ad, then search your brand on Google, then convert via a YouTube ad? Understanding these cross-platform journeys helps you optimize your full marketing mix, not just individual platforms. Tools like Google Analytics 4 with proper UTM tracking or dedicated customer data platforms enable this visibility, and implementing bulk ad launcher tools can help you efficiently test and scale campaigns across multiple platforms simultaneously.

Profit-based bidding strategies. Instead of optimizing for conversions or revenue, optimize for profit. This requires passing profit data (not just revenue) to your ad platforms through conversion values. You might bid more aggressively for high-margin products and less for low-margin products, even if they have similar prices. This maximizes actual business profit, not just revenue or conversion volume.
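Computing a profit-based conversion value means subtracting unit cost from price for each line item before sending the value to the platform. A sketch with a hypothetical order and made-up unit costs; with profit as the value, the platform's reported "ROAS" effectively becomes gross profit per dollar of spend.

```python
def profit_conversion_value(items: list[dict]) -> float:
    """Conversion value = gross profit of the order rather than its revenue."""
    return round(sum(i["qty"] * (i["price"] - i["unit_cost"]) for i in items), 2)


order = [
    {"sku": "HIGH-MARGIN-TEE", "qty": 1, "price": 40.0, "unit_cost": 12.0},
    {"sku": "LOW-MARGIN-CABLE", "qty": 2, "price": 20.0, "unit_cost": 17.0},
]
profit_conversion_value(order)  # 34.0, versus 80.0 in revenue
```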

Audience overlap and saturation analysis. As you scale, you risk showing ads to the same people across multiple campaigns, wasting budget on over-exposure. Use platform tools like Meta's Audience Overlap tool to identify redundancy. Monitor frequency metrics to spot saturation. The fix: consolidate overlapping audiences or implement frequency caps to prevent waste.

These advanced techniques require more sophisticated tracking infrastructure and analytical capabilities. Don't implement them until you've mastered the fundamentals. But once you're spending $50K+ monthly and have solid baseline systems, these techniques unlock the next level of optimization and efficiency.

Building Your Measurement Stack (Tools and Infrastructure)

Effective measurement requires the right tools working together. Here's how to build a measurement stack that provides complete visibility without overwhelming complexity.

Your core measurement stack should include four layers: ad platform tracking (native platform tools), website analytics (independent tracking), conversion tracking (pixel and API), and business intelligence (data aggregation and analysis).

For ad platform tracking, use the native tools provided by each platform. Meta Ads Manager, Google Ads interface, LinkedIn Campaign Manager, etc. These show platform-specific metrics and are essential for day-to-day campaign management. But don't rely on them as your only source of truth—they have incentives to show favorable results.

For website analytics, implement Google Analytics 4 as your independent tracking layer. GA4 provides platform-agnostic data on user behavior, conversion paths, and attribution. Set up conversion events in GA4 that mirror your ad platform events. This allows you to compare platform reporting to independent tracking and identify discrepancies.

For conversion tracking, implement both pixel-based tracking and Conversions API. For Meta, install the Meta Pixel and set up Conversions API through your website platform or a tool like Elevar or Littledata. For Google, implement Google Ads conversion tracking and enhanced conversions. The combination provides the most complete tracking possible given privacy restrictions.

For business intelligence, you need a tool that aggregates data from all sources into a single view. Options include: Looker Studio (formerly Google Data Studio; free, good for basic reporting), Supermetrics (mid-tier, connects multiple platforms to Google Sheets or Looker Studio), Funnel.io or Windsor.ai (enterprise, comprehensive data aggregation), or custom data warehouses using tools like Stitch or Fivetran feeding into Looker or Tableau.

Start simple and add complexity as needed. A basic stack might be: Meta Ads Manager + Google Ads + Google Analytics 4 + Looker Studio. This covers 80% of needs for most businesses under $50K monthly spend. As you scale, add more sophisticated tools.

Set up automated reporting dashboards that update daily. Your dashboard should show: total ad spend, spend by platform/campaign, key conversion metrics (leads, purchases, revenue), cost per conversion metrics, ROAS or ROI, and comparison to goals/benchmarks. When your dashboard updates automatically, you spend time analyzing instead of pulling reports.

Implement automated alerts for performance thresholds. Set up notifications when: Cost Per Purchase exceeds your target by 20%, ROAS drops below your threshold, daily spend exceeds budget by 10%, or conversion tracking stops working (no conversions recorded for 24 hours). Alerts catch problems before they waste significant budget.
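The four alert rules translate directly into checks you could run on a daily schedule. A sketch with hypothetical inputs; how you fetch the numbers and deliver the messages (email, Slack) is left out. In low-volume accounts a 24-hour gap in conversions may be normal, so that window may need widening.

```python
from datetime import datetime, timedelta


def check_alerts(cpp: float, target_cpp: float, roas: float, min_roas: float,
                 spend_today: float, daily_budget: float,
                 last_conversion: datetime, now: datetime) -> list[str]:
    """Return alert messages for the four threshold rules."""
    alerts = []
    if cpp > target_cpp * 1.20:
        alerts.append(f"CPP ${cpp:.2f} is more than 20% over target ${target_cpp:.2f}")
    if roas < min_roas:
        alerts.append(f"ROAS {roas:.2f} below threshold {min_roas:.2f}")
    if spend_today > daily_budget * 1.10:
        alerts.append(f"Spend ${spend_today:,.0f} exceeds budget by more than 10%")
    if now - last_conversion >= timedelta(hours=24):
        alerts.append("No conversions recorded in 24h: check tracking")
    return alerts


alerts = check_alerts(cpp=63.0, target_cpp=50.0, roas=2.8, min_roas=2.5,
                      spend_today=1_050, daily_budget=1_000,
                      last_conversion=datetime(2024, 11, 4, 6, 0),
                      now=datetime(2024, 11, 5, 9, 0))
# two alerts: CPP over target, and 27 hours without a recorded conversion
```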

Create a data governance document that specifies: what tools are used for what purposes, how data flows between systems, who has access to what data, how often data is reviewed, and what to do when tracking breaks. This prevents confusion and ensures consistency across your team.

Budget for measurement tools as a percentage of ad spend. A reasonable benchmark is 2-5% of monthly ad spend allocated to measurement and analytics tools. If you're spending $50K monthly on ads, investing $1,000-2,500 in proper measurement infrastructure is worthwhile. The ROI from better optimization far exceeds the tool costs.

The goal isn't to have the most sophisticated stack—it's to have the right stack for your needs. Start with the basics, ensure they work correctly, then add capabilities as your measurement maturity and budget grow.

Turning Measurement Into Action (The Optimization Cycle)

Measurement without action is just expensive reporting. The real value comes from turning insights into optimizations that improve performance. Here's how to build a systematic optimization cycle.

Establish a weekly optimization routine. Every Monday (or whatever day works for your schedule), review your performance dashboard and take these actions: identify top 3 performing campaigns/ads and increase their budgets by 20-30%, identify bottom 3 performing campaigns/ads and pause them if they've reached statistical significance, review new campaigns launched last week and check if they're trending toward your targets, identify one new test to launch based on patterns observed, and document decisions and rationale in a campaign log.

This weekly routine takes 30-60 minutes but compounds into massive improvements over time. You're continuously shifting budget from losers to winners, which naturally improves overall performance.

Implement a testing calendar. Don't test randomly—plan your tests in advance. Each month, identify 2-3 key hypotheses to test based on your pattern analysis. Schedule these tests, allocate budget, and set success criteria before launching. This prevents ad hoc testing that wastes budget without generating insights.

Use a structured testing framework. For each test, document: the hypothesis (what you're testing and why), the variables (what's different between variations), the success metric (what determines a winner), the required sample size (how much data you need), and the timeline (when you'll make a decision). This structure ensures tests generate actionable insights.
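The "required sample size" entry can be estimated before launch with the standard formula for comparing two proportions, n = (z₁₋α/₂ + z₁₋β)² · [p₁(1−p₁) + p₂(1−p₂)] / (p₂ − p₁)². A sketch under the normal approximation; the 2% baseline conversion rate and 20% minimum lift are hypothetical. The result is a useful reality check: detecting a modest lift on a low conversion rate can take far more than 50-100 conversions per variation.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variation(base_rate: float, min_relative_lift: float,
                              alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors per variation to detect a relative lift in conversion rate
    (two-sided two-proportion z-test, normal approximation)."""
    p1 = base_rate
    p2 = base_rate * (1 + min_relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


n = sample_size_per_variation(base_rate=0.02, min_relative_lift=0.20)
# roughly 21,000 visitors (about 420 conversions) per variation
```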

Follow the 70-20-10 budget allocation rule. Allocate 70% of budget to proven winners (campaigns/audiences/creatives you know work), 20% to promising tests (variations of winners with potential for improvement), and 10% to experimental tests (new ideas that might unlock breakthroughs). This balances consistent performance with innovation.

Create a scaling playbook. When you identify a winning campaign, don't just increase the budget randomly. Follow a systematic scaling process: increase the budget by 20% every 3 days while monitoring Cost Per Purchase; if performance holds, keep scaling, and if Cost Per Purchase worsens by more than 15%, roll back to the previous budget. In parallel, duplicate the campaign to new audiences or placements, test variations of the winning creative to prevent fatigue, and document what made it successful for future reference.
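The budget-stepping part of the playbook can be simulated as a loop over 3-day checks. This sketch assumes "degrades by more than 15%" is measured against the Cost Per Purchase at the start of scaling, and that scaling stops after a rollback; the starting budget and observed values are hypothetical.

```python
def scale_schedule(start_budget: float, baseline_cpp: float, observed_cpps: list[float],
                   step: float = 0.20, max_degradation: float = 0.15) -> list[float]:
    """Budget after each 3-day check: +20% while CPP holds, roll back if it worsens >15%."""
    budget = last_good = start_budget
    history = []
    for cpp in observed_cpps:  # CPP observed at the current budget
        if cpp > baseline_cpp * (1 + max_degradation):
            budget = last_good  # roll back to the last budget that performed
            history.append(budget)
            break
        last_good = budget
        budget = round(budget * (1 + step), 2)
        history.append(budget)
    return history


scale_schedule(500.0, baseline_cpp=40.0, observed_cpps=[41.0, 43.0, 47.5])
# [600.0, 720.0, 600.0]: CPP of 47.5 at $720/day exceeds the $46 limit, so roll back
```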

Build a creative refresh system. Even winning ads eventually fatigue. Monitor frequency metrics (how many times the average user sees your ad). When frequency exceeds 3-4 for cold audiences or 8-10 for retargeting, performance typically declines. Before that happens, launch new creative variations that maintain the winning elements but feel fresh.
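Frequency is impressions divided by reach, so a refresh trigger takes only a few lines. The sketch sets the trigger at the low end of each range above (3 for cold, 8 for retargeting), which is one reasonable reading of "before that happens"; the numbers are hypothetical.

```python
def frequency(impressions: int, reach: int) -> float:
    """Average number of times each person saw the ad."""
    return impressions / reach


# Assumed triggers: the low end of the 3-4 (cold) and 8-10 (retargeting) ranges
FATIGUE_AT = {"cold": 3.0, "retargeting": 8.0}


def needs_refresh(impressions: int, reach: int, audience: str) -> bool:
    return frequency(impressions, reach) >= FATIGUE_AT[audience]


needs_refresh(impressions=330_000, reach=100_000, audience="cold")         # True (3.3)
needs_refresh(impressions=330_000, reach=100_000, audience="retargeting")  # False
```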

Implement a campaign audit schedule. Monthly, review all active campaigns and ask: Is this campaign still profitable? Does it still align with our goals? Is there a better use for this budget? Could we improve it with a simple change? Pause campaigns that no longer serve a purpose. Consolidate campaigns with overlapping audiences. This prevents budget waste on zombie campaigns that run indefinitely without scrutiny, and learning how to use AI to launch ads can help you systematically test and scale new campaigns more efficiently.

Share wins and losses with your team. When you discover something that works, document it and share it. When something fails, share that too. Build a knowledge base of insights that compounds over time. This turns individual learning into organizational learning.

The key to effective optimization is consistency, not perfection. Small improvements compounded weekly create dramatic results over months. A 5% improvement in Cost Per Purchase