You're staring at your Meta Ads Manager at 11 PM on a Wednesday, manually duplicating ad sets for the third time this week. Five headlines to test. Four different images. Three audience segments. That's 60 possible combinations—and you've only created 12 of them over the past two hours.

Meanwhile, your competitor just launched 200 variations in the time it took you to grab coffee.

This is the reality of manual campaign testing in 2026. It's not just slow—it's mathematically impossible to scale. Every hour you spend creating variations is an hour you're not analyzing performance, refining strategy, or actually growing your business. And here's the brutal truth: while you're testing one variable at a time, the algorithm is learning from thousands of signals simultaneously.

The gap between manual testing and AI-powered automation isn't just about speed. It's about the fundamental difference between sequential optimization and parallel intelligence. Manual testing forces you to choose: test headlines OR test audiences OR test placements. Automated campaign testing lets you explore all of them simultaneously, identifying winning combinations you'd never discover through traditional methods.

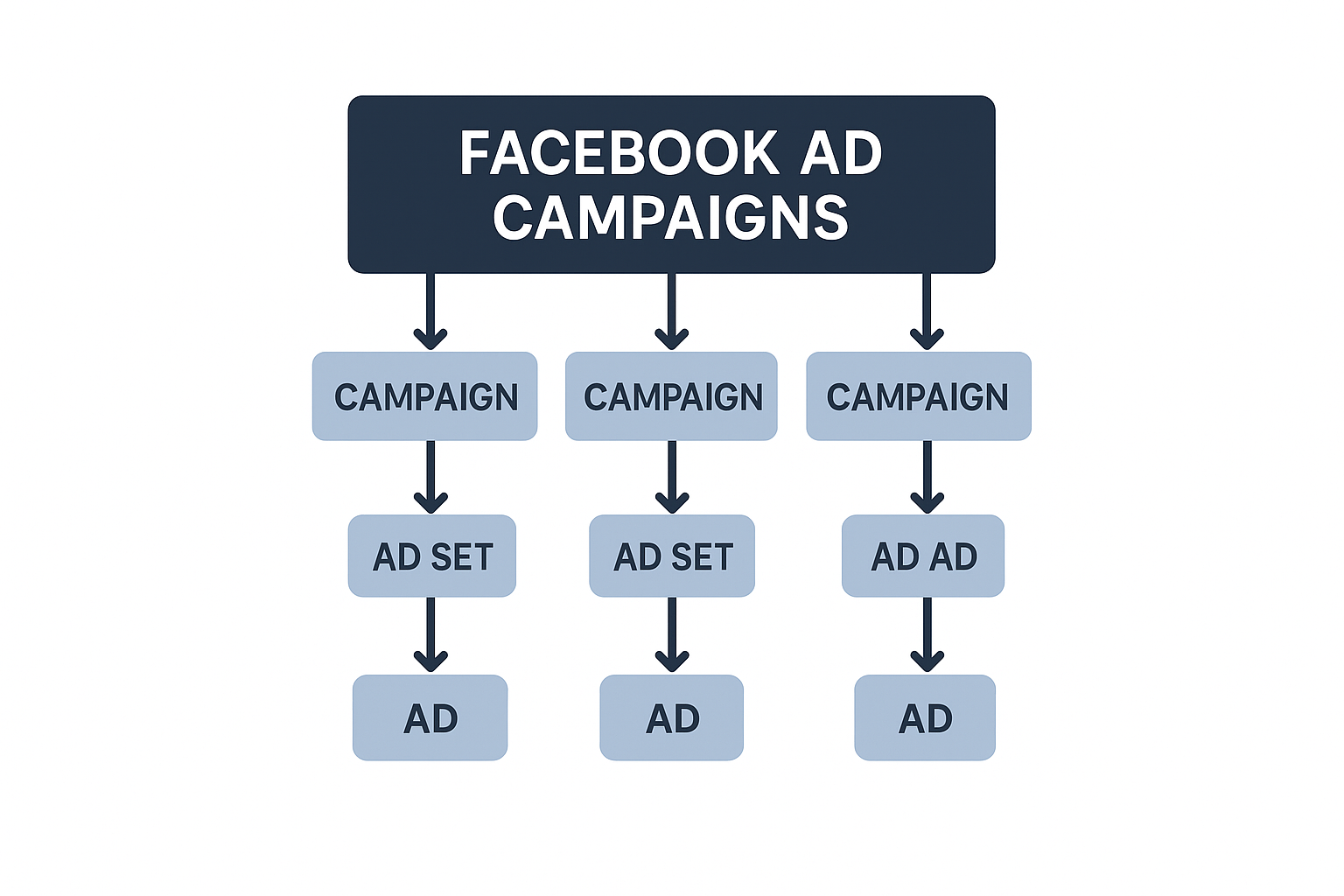

The problem with traditional testing isn't just speed—it's the mathematical impossibility of exploring enough combinations to find true winners. When you're testing 5 headlines, 4 images, and 3 audiences, that's 60 possible combinations. Manual testing forces you to pick maybe 10-15 of those and hope you chose wisely. You're essentially playing roulette with a tiny fraction of the possibilities.
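Enumerating the grid shows how small that sample is. Below is a minimal Python sketch that uses `itertools.product`. The labels `H1`, `I1`, `A1` and so on are placeholders for real headlines, images, and audience segments.

```python
from itertools import product

# Placeholder labels for the example: 5 headlines, 4 images, 3 audiences.
headlines = [f"H{i}" for i in range(1, 6)]
images = [f"I{i}" for i in range(1, 5)]
audiences = [f"A{i}" for i in range(1, 4)]

# Full factorial design: every headline with every image and every audience.
combinations = list(product(headlines, images, audiences))


def coverage(tested: int, total: int) -> float:
    """Fraction of the combination space that a manual test plan covers."""
    return tested / total


print(len(combinations))  # 60
for tested in (10, 15):
    print(f"{tested} tested -> {coverage(tested, len(combinations)):.0%} coverage")
```

Testing 10 to 15 combinations covers roughly 17% to 25% of the space. Each new variable multiplies the grid, so that share only shrinks as you add variables.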

But here's what changes everything: automated campaign testing doesn't just work faster. It thinks differently. While you're testing headlines OR audiences OR placements sequentially, AI explores all of them simultaneously. It identifies patterns you'd never spot manually—like how Headline A performs 40% better with Audience B but underperforms with Audience C, while Headline D does the exact opposite.

These interaction effects are where the real performance gains hide. They're practically invisible to manual testing, because you'd need months and large budgets to test every combination individually. Automated testing can surface them far sooner because it runs many combinations in parallel.
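The sketch below makes the interaction idea concrete. The click-through rates are made-up numbers chosen only to illustrate the pattern described above. They are not real results. If you average each headline across audiences, which is effectively what a one-variable-at-a-time test does, you get a single winner. If you look at each audience separately, the ranking flips.

```python
# Made-up CTRs for illustration only: 2 headlines x 2 audiences.
ctr = {
    ("Headline A", "Audience B"): 0.028,
    ("Headline A", "Audience C"): 0.015,
    ("Headline D", "Audience B"): 0.020,
    ("Headline D", "Audience C"): 0.024,
}


def pooled_winner(results: dict) -> str:
    """Best headline when results are averaged across all audiences."""
    rates: dict = {}
    for (headline, _audience), rate in results.items():
        rates.setdefault(headline, []).append(rate)
    return max(rates, key=lambda h: sum(rates[h]) / len(rates[h]))


def winner_per_audience(results: dict) -> dict:
    """Best headline within each audience segment."""
    best: dict = {}
    for (headline, audience), rate in results.items():
        if audience not in best or rate > results[(best[audience], audience)]:
            best[audience] = headline
    return best


def interaction(results: dict, h1: str, h2: str, a1: str, a2: str) -> float:
    """Difference-in-differences: how h1's edge over h2 changes from a1 to a2.
    A value near zero means the two variables do not interact."""
    return (results[(h1, a1)] - results[(h2, a1)]) - (results[(h1, a2)] - results[(h2, a2)])


print(pooled_winner(ctr))        # Headline D
print(winner_per_audience(ctr))  # {'Audience B': 'Headline A', 'Audience C': 'Headline D'}
print(round(interaction(ctr, "Headline A", "Headline D", "Audience B", "Audience C"), 3))  # 0.017
```

The pooled view would crown Headline D everywhere. That choice gives up Headline A's lead with Audience B.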

The transformation isn't just operational; it's strategic. Automated testing can surface winning combinations you would never have thought to test manually. The AI doesn't just execute your testing plan faster. It explores creative territory beyond your own intuition, learning from thousands of performance signals to generate increasingly well-targeted variations.

This guide walks you through the complete implementation—from identifying which variables actually move the needle, to designing testing matrices that ensure statistical validity, to launching AI systems that get smarter with every campaign. You'll learn how to transform testing from a time-consuming bottleneck into a systematic competitive advantage that compounds over time.

The goal isn't just saving time on ad creation. It's building a testing engine that continuously discovers insights, automatically scales winners, and evolves your advertising strategy faster than competitors can manually iterate. Let's break down exactly how to build that system step-by-step.

Building Your Testing Foundation Like a Pro

Before you launch a single automated test, you need to build the infrastructure that makes intelligent optimization possible. Think of this like preparing a kitchen before cooking—you need the right ingredients, tools, and workspace organized before you start creating.

The difference between automated testing that delivers breakthrough results and automation that just creates expensive noise comes down to foundation quality. AI can only optimize what it can measure, and it can only learn from data it can access.

Essential Requirements and Access Setup

Start with admin access to your ad account in Meta Business Manager. A limited role is not enough. Automation requires the ability to create campaigns, modify budgets, and adjust targeting without manual approval bottlenecks.

If you're new to the platform, understanding how to run Facebook ads at a foundational level ensures you have the baseline knowledge needed to implement automated testing successfully.

Next, verify you have at least 30 days of historical campaign data. This baseline establishes performance benchmarks and gives AI systems enough signal to identify patterns. Without this history, you're asking the system to optimize blind.

Your creative asset library needs organization too. Create folders by format (video, static, carousel), performance tier (proven winners, testing, archive), and campaign objective. When automation kicks in, it needs to pull from organized assets, not dig through chaos.

Data Collection and Organization Framework

Pull performance data from your best campaigns over the past 90 days. You're looking for three specific benchmarks: your average cost per result, your typical click-through rate, and your conversion rate by audience segment.

While manual organization provides the foundation, implementing AI tools for campaign management can automate much of this ongoing data collection and analysis process.

Document your audience segments with actual performance data attached. "Women 25-34 interested in fitness" isn't enough. You need "Women 25-34, fitness interest, engaged with video content in past 30 days, 2.3% CTR, $12 CPA." This specificity feeds better AI decisions.
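One way to keep that specificity is to store each segment together with the raw counts behind its metrics. That way CTR and CPA are always recomputed from source data instead of being typed in by hand. Here is a minimal Python sketch. The class and field names are hypothetical, and the example counts are invented to reproduce the 2.3% CTR and $12 CPA above.

```python
from dataclasses import dataclass


@dataclass
class SegmentPerformance:
    """One documented audience segment plus the raw counts behind its metrics."""
    description: str
    impressions: int
    clicks: int
    conversions: int
    spend: float

    @property
    def ctr(self) -> float:
        return self.clicks / self.impressions if self.impressions else 0.0

    @property
    def cpa(self) -> float:
        return self.spend / self.conversions if self.conversions else float("inf")

    def summary(self) -> str:
        return f"{self.description}, {self.ctr:.1%} CTR, ${self.cpa:.0f} CPA"


# Invented counts chosen to match the example in the text.
seg = SegmentPerformance(
    "Women 25-34, fitness interest, engaged with video content in past 30 days",
    impressions=100_000, clicks=2_300, conversions=150, spend=1_800.0,
)
print(seg.summary())
```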

Create a simple spreadsheet tracking your top 10 performing creatives. Include the headline structure, visual style, call-to-action type, and the specific audience segments where each performed best. These patterns become the template for automated variation generation.

Testing Environment Configuration

Your campaign structure needs to support clear attribution. Use a consistent naming convention: [Objective]_[Audience]_[Creative Type]_[Test Variable]_[Date]. This format lets you, and your automation system, instantly understand what each campaign tests.
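When the fields are joined with a fixed separator, names can be built and parsed mechanically. The sketch below assumes underscores as the separator and a YYYYMMDD date. Both are illustrative choices, and the example values are hypothetical.

```python
from datetime import date

FIELDS = ("objective", "audience", "creative_type", "test_variable", "date")
SEP = "_"


def _clean(value: str) -> str:
    """Replace spaces and separator characters so each field stays a single token."""
    return value.strip().replace(SEP, "-").replace(" ", "-")


def build_name(objective: str, audience: str, creative_type: str,
               test_variable: str, run_date: date) -> str:
    parts = (objective, audience, creative_type, test_variable, run_date.strftime("%Y%m%d"))
    return SEP.join(_clean(p) for p in parts)


def parse_name(name: str) -> dict:
    """Split a campaign name back into its named fields."""
    parts = name.split(SEP)
    if len(parts) != len(FIELDS):
        raise ValueError(f"expected {len(FIELDS)} fields, got {len(parts)}: {name!r}")
    return dict(zip(FIELDS, parts))


name = build_name("Conversions", "LAL 1% Buyers", "Video", "Headline", date(2026, 3, 2))
print(name)  # Conversions_LAL-1%-Buyers_Video_Headline_20260302
print(parse_name(name))
```

Sanitizing each field is what keeps parsing reliable. An audience label that happens to contain an underscore would otherwise split into two fields.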

Set up your budget allocation with testing in mind. Reserve 20-30% of your total ad spend specifically for testing new variations. This dedicated budget prevents testing from cannibalizing your proven performers while ensuring you have enough data to reach statistical significance.

Configure your conversion tracking with granular event parameters. Don't just track "Purchase". Also capture "Purchase_Product_Category" and "Purchase_Value_Range", so every purchase records what was bought and at what order value. This detail helps AI understand not just what converts, but what converts profitably.
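The sketch below shows what that extra detail can look like. It builds a Purchase event shaped like a Meta Conversions API event, with the order value, currency, and product category in `custom_data`. The `value_range` property and its brackets are hypothetical custom fields. A real request also needs `user_data` (customer information parameters), which this sketch omits.

```python
import json
import time
from typing import Optional


def value_range(value: float) -> str:
    """Hypothetical order-value brackets; adjust to your own price points."""
    if value < 50:
        return "under_50"
    if value < 150:
        return "50_to_149"
    return "150_plus"


def purchase_event(value: float, currency: str, category: str,
                   event_time: Optional[int] = None) -> dict:
    """Build one Purchase event; user_data is intentionally omitted in this sketch."""
    return {
        "event_name": "Purchase",
        "event_time": event_time if event_time is not None else int(time.time()),
        "action_source": "website",
        "custom_data": {
            "value": value,
            "currency": currency,
            "content_category": category,
            "value_range": value_range(value),  # custom property (hypothetical name)
        },
    }


# 1767225600 is 2026-01-01T00:00:00Z, fixed here so the output is reproducible.
payload = {"data": [purchase_event(89.0, "USD", "running_shoes", event_time=1767225600)]}
print(json.dumps(payload, indent=2))
```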

The foundation work feels tedious, but it's what separates campaigns that scale profitably from those that burn budget without learning anything useful.

Step 1: Identify Your High-Impact Testing Variables

Here's the truth about campaign testing that nobody talks about: many advertisers spend most of their testing budget on variables that barely move the needle. Meanwhile, the variables that could dramatically improve their performance sit untested because they seem "too obvious" or "already optimized."

The difference between mediocre testing and transformative results comes down to one thing: knowing which variables actually matter for your specific campaigns. Not what worked for someone else's business. Not what the latest marketing guru says you should test. What will genuinely impact your performance.

Let's break down how to identify those high-impact variables systematically.

Creative Element Mapping

Creative components typically drive the largest performance variation in Meta campaigns. Winning and losing combinations can differ several-fold, not just by a few percentage points. But here's where most advertisers go wrong: they test random creative elements without understanding which components their audience actually responds to.

Start by analyzing your top 10 performing ads from the past 90 days. Look for patterns in headlines—do questions outperform statements? Do benefit-focused headlines beat feature-focused ones? Do emotional triggers generate more engagement than logical appeals? These patterns reveal what resonates with your specific audience.

Rather than manually creating dozens of creative variations, AI ad creation tools can generate multiple headline and copy combinations based on your top-performing content patterns, dramatically accelerating your testing velocity.

Next, categorize your visual assets by format and performance. Video ads, static images, and carousel formats each serve different purposes in the customer journey. A video might excel at cold audience education while static images convert warm audiences more efficiently. Document which formats perform best at each funnel stage—this becomes your creative testing roadmap.

Don't overlook call-to-action variations. "Learn More" versus "Shop Now" versus "Get Started" can shift conversion rates by 30% or more depending on your offer and audience intent. Test CTAs that align with different stages of awareness and purchase readiness.

Audience Segmentation Strategy

Audience targeting represents your second-highest impact testing opportunity, yet most advertisers approach it backwards. They start with broad interest categories and hope for the best. Instead, begin with your existing customer data.

Facebook ads custom audiences let you test specific segments based on behavior, engagement, and purchase history, rather than relying solely on interest-based targeting.

Analyze your customer database for hidden patterns. Which demographic segments show the highest lifetime value? Which behavioral indicators predict purchase likelihood? Which engagement patterns separate buyers from browsers? These insights reveal audience segments worth testing that your competitors probably haven't discovered.

Create a testing hierarchy: start with lookalike audiences based on your best customers, then expand to interest-based segments that mirror those characteristics, and finally test broader cold audiences. This systematic approach ensures you're testing audiences with the highest probability of success first.

Don't forget retargeting segmentation. Someone who viewed your product page but didn't purchase requires different messaging than someone who abandoned their cart. Test audience segments based on specific behaviors and engagement levels—this granular approach often uncovers your highest-converting opportunities.

Step 2: Design Your Systematic Testing Matrix

You've identified your high-impact variables. Now comes the part where most advertisers stumble: turning that list into a structured testing plan that actually produces reliable results. The difference between random testing and systematic optimization isn't just organization—it's the scientific methodology that separates real insights from statistical noise.

Here's the challenge: you can't test everything at once without massive budgets, but testing one thing at a time takes forever. The solution? A prioritized testing matrix that maximizes learning while minimizing risk and resource waste.

Variable Prioritization and Impact Assessment

Start by scoring each potential test using a simple impact-versus-effort framework. High-impact variables that require low effort go first. Creative elements typically offer the highest performance variation with moderate implementation effort. Audience tests can deliver significant improvements but require larger sample sizes for statistical validity. Technical settings like bid strategies often provide quick wins with minimal creative investment.

Create a scoring system: rate each variable on impact potential (1-10) and implementation difficulty (1-10). Divide impact by difficulty to get your priority score. A headline test scoring 8 for impact and 3 for difficulty gets a priority score of 2.67. An audience expansion scoring 7 for impact but 8 for difficulty gets 0.88. Test the higher scores first.
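The scoring rule is easy to automate once your backlog grows beyond a handful of ideas. Here is a minimal sketch that reuses the two example tests above. The function name and the 1-10 validation are illustrative choices.

```python
def priority_score(impact: int, difficulty: int) -> float:
    """Impact (1-10) divided by implementation difficulty (1-10)."""
    for label, score in (("impact", impact), ("difficulty", difficulty)):
        if not 1 <= score <= 10:
            raise ValueError(f"{label} must be between 1 and 10, got {score}")
    return impact / difficulty


backlog = {"Headline test": (8, 3), "Audience expansion": (7, 8)}
ranked = sorted(backlog, key=lambda name: priority_score(*backlog[name]), reverse=True)
for name in ranked:
    print(f"{name}: {priority_score(*backlog[name]):.2f}")
# Headline test: 2.67
# Audience expansion: 0.88
```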

Budget allocation follows statistical significance requirements. Each test variation needs enough spend to generate meaningful data—typically at least 50-100 conversions per variation for reliable results. If your average cost per conversion is $20, budget at least $1,000-$2,000 per variation. Testing five headline variations? You're looking at $5,000-$10,000 minimum for statistically valid results.
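The arithmetic is a simple product. This sketch assumes your cost per conversion stays roughly constant during the test, which is rarely exact, so treat the result as a floor.

```python
def minimum_test_budget(cost_per_conversion: float, conversions_per_variation: int,
                        variations: int) -> float:
    """Spend needed for every variation to reach the target conversion count,
    assuming cost per conversion stays roughly constant during the test."""
    return cost_per_conversion * conversions_per_variation * variations


low = minimum_test_budget(20, 50, 5)
high = minimum_test_budget(20, 100, 5)
print(f"${low:,.0f} to ${high:,.0f}")  # $5,000 to $10,000
```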

Control Group Establishment

Every test needs a control—your baseline for measuring improvement. Your control group should represent your current best practices, not your average performance. Use your top-performing campaign from the past 30 days as the foundation.

Document your control group's performance across key metrics: click-through rate, conversion rate, cost per acquisition, and return on ad spend. These become your benchmarks. Any test variation needs to beat these numbers by a meaningful margin—typically 15-20% improvement—to justify scaling.
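Beating the control by 15-20% only matters if the difference is larger than random noise. The sketch below combines both checks for a rate metric such as conversion rate. It uses a standard pooled two-proportion z-test built from the Python standard library. The function names and example counts are hypothetical. Cost metrics like CPA or ROAS need a different test.

```python
from math import erf, sqrt


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value for a difference between two conversion rates (pooled z-test)."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    # Standard normal CDF: Phi(z) = 0.5 * (1 + erf(z / sqrt(2)))
    return 2 * (1 - 0.5 * (1 + erf(abs(z) / sqrt(2))))


def beats_control(control: tuple, variant: tuple,
                  min_uplift: float = 0.15, alpha: float = 0.05) -> bool:
    """control and variant are (conversions, clicks) tuples. Requires both the
    relative uplift threshold and statistical significance before scaling."""
    rate_c = control[0] / control[1]
    rate_v = variant[0] / variant[1]
    if rate_c == 0:
        raise ValueError("control has no conversions; relative uplift is undefined")
    uplift = (rate_v - rate_c) / rate_c
    return uplift >= min_uplift and two_proportion_p_value(*control, *variant) < alpha


print(beats_control((100, 5000), (140, 5000)))  # True: +40% and significant
print(beats_control((100, 5000), (110, 5000)))  # False: +10% is below the threshold
print(beats_control((10, 500), (12, 500)))      # False: +20%, but not significant
```

The third case is the one to watch. A 20% lift on a small sample looks like a winner but is well within the range of chance.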

Isolation is critical. Your control group must run simultaneously with test variations to account for external factors like seasonality, platform changes, or market conditions. Sophisticated advertising manager platforms can orchestrate complex testing sequences, ensuring each test receives adequate budget and runtime while preventing conflicts between simultaneous experiments.

Set your control group budget at 30-40% of your total testing budget. This ensures you're always collecting fresh baseline data while exploring new variations. If performance drops across all variations including your control, you know it's an external factor, not your tests.
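Combined with the 20-30% testing reserve from the foundation setup, the full budget split can be sketched in Python. The default shares below (25% testing, 35% of that for the control) are just midpoints of the suggested ranges, and the $20,000 total is a hypothetical example.

```python
def split_budget(total_spend: float, testing_share: float = 0.25,
                 control_share: float = 0.35) -> dict:
    """Split total ad spend into proven campaigns, a control group, and test variations.

    testing_share: fraction of total spend reserved for testing (20-30% suggested).
    control_share: fraction of the testing budget kept on the control (30-40% suggested).
    """
    for label, share in (("testing_share", testing_share), ("control_share", control_share)):
        if not 0 < share < 1:
            raise ValueError(f"{label} must be between 0 and 1, got {share}")
    testing = total_spend * testing_share
    control = testing * control_share
    return {
        "proven": round(total_spend - testing, 2),
        "control": round(control, 2),
        "variations": round(testing - control, 2),
    }


print(split_budget(20_000))  # {'proven': 15000.0, 'control': 1750.0, 'variations': 3250.0}
```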

Test Sequencing and Resource Management

Sequential testing means running one test at a time—slower but cleaner data. Parallel testing runs multiple tests simultaneously—faster but requires careful variable isolation. The right approach depends on your budget and timeline.

Start with sequential testing for variables that might interact. Test headlines first, identify winners, then test images using those winning headlines. This prevents confounding variables—you'll know whether performance improved because of the new image or the headline change.

Use parallel testing for independent variables. You can test different audience segments simultaneously as long as they don't overlap. Exclude each segment from the others so the same people don't land in multiple tests. This accelerates learning without compromising data quality.

Understanding when to scale ad campaigns becomes critical during this phase—scaling too early wastes budget on unproven variations, while scaling too late means leaving money on the table.

Step 3: Launch AI-Powered Automation with AdStellar

You've identified your testing variables and designed your testing matrix. Now comes the transformation moment—moving from manual execution to AI-powered automation that operates at a scale impossible for human teams.

This is where strategy meets execution velocity.

Platform Integration and Data Sync

Start by connecting AdStellar AI to your Meta Business Manager account. The integration process takes about five minutes and requires admin-level access to ensure the platform can create campaigns, manage budgets, and access performance data in real-time.

Meta advertising automation has evolved from simple rule-based systems to sophisticated AI platforms that can manage complex testing matrices across your entire account. AdStellar's integration pulls your historical campaign data—typically the last 90 days—to establish performance baselines and identify patterns in your existing creative and audience combinations.

During setup, you'll configure permission levels that determine what actions the AI can take autonomously versus what requires your approval. Most advertisers start with automated variation creation and testing, while keeping budget scaling decisions on manual approval until they've built confidence in the system's judgment.
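Conceptually, that permission setup is a rule that decides which actions run on their own and which wait in an approval queue. The sketch below is a generic illustration with hypothetical action names. It does not reflect AdStellar's actual settings or API.

```python
from enum import Enum


class Action(Enum):
    CREATE_VARIATION = "create_variation"
    LAUNCH_TEST = "launch_test"
    SCALE_BUDGET = "scale_budget"


# Hypothetical starting policy: creation and testing run autonomously,
# budget scaling waits for a human.
AUTONOMOUS = frozenset({Action.CREATE_VARIATION, Action.LAUNCH_TEST})


def route(action: Action, approval_queue: list, policy: frozenset = AUTONOMOUS) -> str:
    """Execute allowed actions immediately; queue everything else for review."""
    if action in policy:
        return "executed"
    approval_queue.append(action)
    return "queued for approval"


queue: list = []
print(route(Action.LAUNCH_TEST, queue))   # executed
print(route(Action.SCALE_BUDGET, queue))  # queued for approval
```

Keeping the policy as data means that widening the AI's autonomy later is a one-line change: add `Action.SCALE_BUDGET` to the autonomous set.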

Automated Variation Generation Configuration

Here's where the magic happens. AdStellar analyzes your top-performing campaigns to identify the specific elements that drive results—headline structures, visual patterns, audience characteristics, and messaging frameworks that consistently outperform.

The AI doesn't just randomly combine elements. It recognizes that certain headline types work better with specific images, that particular audience segments respond to different value propositions, and that successful ads share underlying patterns you might not consciously notice.

Configure your variation parameters by setting boundaries: how many headline variations to test per campaign, which audience segments to prioritize, what budget ranges to explore. While various Facebook ads software options offer automation features, AI-powered platforms like AdStellar distinguish themselves through intelligent pattern recognition that improves with every campaign.

The system then generates dozens or hundreds of variations based on your parameters—each one informed by performance data rather than guesswork.

AI Learning System Activation

Once your variations launch, the real intelligence kicks in. AdStellar's AI continuously monitors performance across every active test, feeding real-time data into optimization algorithms that identify winning patterns faster than traditional A/B testing.

The system recognizes when a specific headline-image-audience combination outperforms others and automatically allocates more budget to winners while pausing underperformers. This dynamic optimization happens 24/7, making thousands of micro-adjustments that would be impossible to manage manually.
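AdStellar's own allocation logic isn't described here. One common technique for shifting budget toward winners while still exploring is Thompson-sampling-style allocation. It estimates each variation's probability of being the best from a Beta posterior over its conversion rate, splits the budget in proportion to those probabilities, and pauses variations whose probability falls below a floor. Here is a minimal sketch. The 2% pause floor and the example counts are hypothetical.

```python
import random
from typing import Optional


def allocate_budget(stats: dict, daily_budget: float, draws: int = 10_000,
                    pause_below: float = 0.02,
                    rng: Optional[random.Random] = None) -> dict:
    """stats maps variation -> (conversions, clicks).

    Each draw samples a conversion rate for every variation from its
    Beta(1 + conversions, 1 + clicks - conversions) posterior and records which
    variation came out on top. Budget is split in proportion to those win shares;
    variations below `pause_below` get no budget (paused).
    """
    rng = rng or random.Random()
    wins = dict.fromkeys(stats, 0)
    for _ in range(draws):
        samples = {name: rng.betavariate(1 + conv, 1 + clicks - conv)
                   for name, (conv, clicks) in stats.items()}
        wins[max(samples, key=samples.get)] += 1
    shares = {name: count / draws for name, count in wins.items()}
    active = {name: s for name, s in shares.items() if s >= pause_below}
    if not active:  # never pause everything: keep the current leader
        leader = max(shares, key=shares.get)
        active = {leader: shares[leader]}
    total = sum(active.values())
    return {name: round(daily_budget * s / total, 2) for name, s in active.items()}


stats = {"Variation A": (30, 1000), "Variation B": (60, 1000), "Variation C": (10, 1000)}
print(allocate_budget(stats, daily_budget=300, rng=random.Random(7)))
```

With these made-up counts, Variation B should take essentially the entire budget, and A and C fall below the floor and get paused. When two variations are close, both keep receiving spend until the data separates them. That is how this approach keeps exploring instead of locking in an early leader.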

For advertisers managing campaigns across multiple platforms, exploring automated Instagram ads alongside Facebook automation creates a unified testing approach that maximizes learning across your entire social advertising ecosystem.

Ready to transform your advertising strategy? Get Started With AdStellar AI and be among the first to launch and scale your ad campaigns 10× faster with our intelligent platform that automatically builds and tests winning ads based on real performance data.