A/B testing is one of the most straightforward yet powerful tools in a marketer's arsenal. At its core, it's a simple experiment designed to compare two versions of something—an ad, an email, a landing page—to see which one gets better results. You show your original version (let's call it A) to one slice of your audience and a slightly modified version (B) to another. The audience's behavior tells you which one wins. It’s that simple. This process takes the guesswork out of optimization and replaces it with cold, hard data.

Understanding A/B Testing in Marketing

Think of it like an eye exam. The optometrist shows you two lenses and asks, "Which is better, one or two?" You compare them directly and give a clear answer. They repeat this process, isolating tiny changes until your vision is perfectly sharp.

That’s exactly what A/B testing in marketing does for your campaigns. Version A is your "control"—the original ad or page you're already using. Version B is the "variation," where you've changed just one specific element to see if it makes a difference.

The Power of One Change

The secret sauce to a good A/B test is isolating a single variable. If you test a new headline and a new image at the same time, you'll never know which change actually moved the needle. Was it the compelling new copy or the eye-catching photo? You have no idea.

Instead, you focus on one element at a time, such as:

- A headline: "Save 20% Today" vs. "Get Your Discount Now"

- An image: A clean product shot vs. a lifestyle photo of someone using the product

- A call-to-action (CTA) button: "Learn More" vs. "Get Started Free"

By measuring how users respond—through clicks, sign-ups, or purchases—you let your audience vote for the winner with their actions. This isn't a niche tactic; it's a mainstream strategy. In fact, a whopping 77% of companies A/B test their websites, with landing pages and emails being other popular battlegrounds.

To help you get started, here's a quick rundown of the essential terms you'll encounter.

A/B Testing Core Concepts at a Glance

This table breaks down the fundamental concepts of A/B testing into simple, easy-to-understand terms. Think of it as your cheat sheet for running effective experiments.

| Concept | Role in A/B Testing | Simple Analogy |
|---|---|---|
| Control (Version A) | The original, baseline version of your asset. It's the "champion" you're trying to beat. | Your go-to chocolate chip cookie recipe that you've used for years. |
| Variation (Version B) | The modified version where you've changed one specific element to test its impact. | The same cookie recipe, but this time you swap brown sugar for white sugar. |
| Variable | The single element you are changing between the control and the variation (e.g., headline, CTA button). | The type of sugar in the cookie recipe. Everything else stays the same. |
| Conversion Rate | The percentage of users who complete a desired action (e.g., click, purchase, sign up). | The percentage of friends who say your new cookie is better than the old one. |
| Statistical Significance | The mathematical confidence that the result is due to your change, not random chance. | Getting enough friends to taste-test the cookies so you know the winner wasn't a fluke. |

Each of these concepts plays a critical role in ensuring your tests are structured, reliable, and produce insights you can actually trust.

A/B testing removes ego and intuition from the decision-making process. It’s not about what your team thinks is better; it’s about what the data proves is better, one incremental improvement at a time.

This disciplined approach is the bedrock of conversion rate optimization (CRO). If you're looking to test multiple variables at once, you might also want to explore the differences between A/B testing and multivariate testing.

Ultimately, A/B testing is about making smarter, data-backed decisions that steadily improve campaign outcomes and maximize your return on investment.

The A/B Testing Framework From Hypothesis to Analysis

A great A/B test isn’t just a random shot in the dark—it’s a disciplined process that turns marketing hunches into predictable science. It’s all about following a clear framework that takes you from an educated guess to a data-backed conclusion, ensuring every tweak you make is actually a step forward.

It all kicks off with a solid hypothesis. This isn't some complex academic paper; it's a simple, testable prediction about what you think will move the needle. A good hypothesis usually looks something like this: "If I change [X], then [Y] will improve because [Z]."

For example, you might hypothesize: "Changing our CTA button from 'Learn More' to 'Get Your Free Trial' will boost sign-ups because the new copy is more specific and screams immediate value." Simple, clear, and ready to test.

Crafting Your Experiment

Once your hypothesis is locked in, it's time to design the experiment. The golden rule here is to isolate a single variable. If you change the headline, the main image, and the CTA all at once, you’ll have no idea which change actually caused the lift—or the drop—in performance. You'll be flying blind.

After you’ve zeroed in on your variable, you need to nail down the nuts and bolts of the test:

- Audience Segmentation: Decide which slice of your audience will see the test. You'll split this group randomly, with half seeing Version A (your control, or the original) and the other half seeing Version B (the new variation).

- Sample Size: You need enough people in your test to get trustworthy results. A test run on just 50 people is basically a coin toss, easily swayed by random chance.

- Test Duration: Let the test run long enough to smooth out any weird fluctuations in user behavior, like the difference between weekday and weekend traffic.
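When you control the split yourself (on your own landing page, for example), one common way to randomize is to hash each user ID into a bucket. The sketch below is a minimal illustration, not a prescribed method: the function name `assign_variant`, the experiment label `cta_test`, and the user IDs are all hypothetical, and ad platforms typically handle the split for you.

```python
import hashlib

def assign_variant(user_id: str, experiment: str = "cta_test") -> str:
    """Deterministically place a user in 'A' or 'B' with roughly equal odds."""
    # Hash the experiment name together with the user ID so each
    # experiment gets its own independent split.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Treat the hash as a big integer and use its parity to pick a side.
    return "A" if int(digest, 16) % 2 == 0 else "B"

# Illustrative audience of 10,000 made-up user IDs.
users = [f"user-{i}" for i in range(10_000)]
counts = {"A": 0, "B": 0}
for user in users:
    counts[assign_variant(user)] += 1

print(counts)  # the two groups should be close to 5,000 each
```

Because the assignment depends only on the ID, a returning visitor always sees the same version, which keeps the two groups clean for the whole test.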

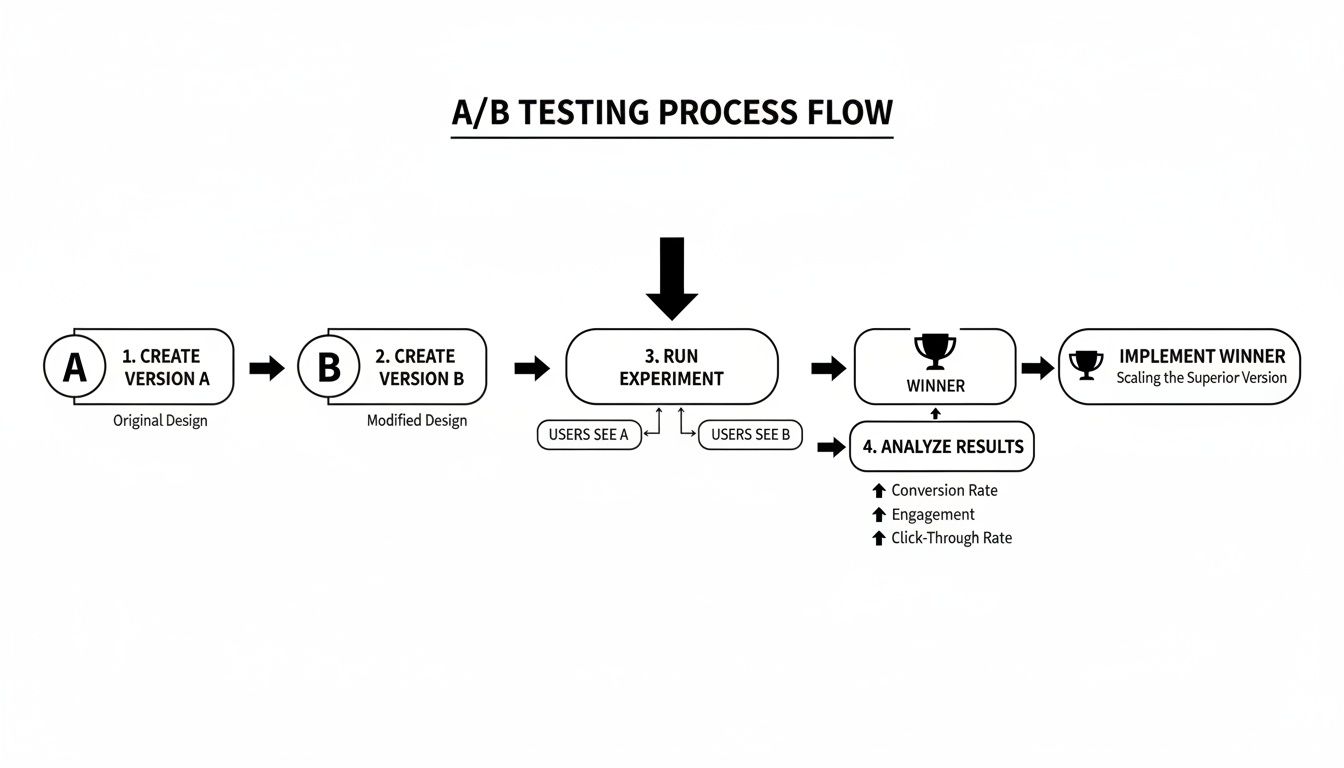

This flowchart breaks down the straightforward path from creating your variations to crowning a clear winner.

As you can see, it’s a simple head-to-head battle where two versions enter, and one comes out on top.

Analyzing the Results

After the test has run its course, it's time to dig into the data. The single most important concept here is statistical significance. This is basically a confidence score (usually a percentage) that tells you whether the difference you're seeing is likely real or just a random fluke. The industry standard is a 95% confidence level, which means that if your change truly made no difference, a gap this large would show up by chance less than 5% of the time.

Don’t call a test early just because one version is pulling ahead. You need to wait until you have a big enough sample size and hit statistical significance to make a decision you can actually trust.
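Most testing tools calculate significance for you, but it helps to see roughly what happens under the hood. One standard way to compare two conversion rates is a two-proportion z-test; the sketch below assumes that approach (your tool may use a different method), and the visitor and conversion counts are invented for illustration.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, p_value) for the difference between two conversion rates.

    Assumes both groups have some conversions and some non-conversions;
    it does not handle the edge case where the pooled rate is 0 or 1.
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate: the conversion rate if A and B were really identical.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_error = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_error
    # Two-sided p-value: how often chance alone produces a gap this large.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Hypothetical results: 5,000 visitors per version.
z, p = two_proportion_z_test(conv_a=200, n_a=5000, conv_b=260, n_b=5000)
print(f"z = {z:.2f}, p = {p:.4f}, significant at 95%: {p < 0.05}")
```

A p-value below 0.05 corresponds to the 95% threshold. Anything above it means the test hasn't yet shown a difference you can trust.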

This data-driven approach is becoming the norm, with 58% of businesses using A/B testing to fine-tune their conversion rates. And for good reason—UX improvements driven by testing can potentially boost conversions by a mind-blowing 400%. While 44% of companies globally use split testing software, only 7% find it difficult, which shows just how accessible it has become. If you want to nerd out on the numbers, you can read more about key A/B testing statistics. Once you have a winner, check out our guide on how to analyze ad performance for a deeper dive.

Real-World A/B Testing Examples for Paid Social Ads

Theory is one thing, but seeing A/B testing in the wild is where the lightbulbs really go on. Paid social platforms like Meta (Facebook and Instagram) are fantastic labs for this kind of experimentation. They give you powerful targeting tools and crystal-clear metrics, meaning even small, methodical tweaks can deliver a major boost to your campaign results.

Let’s get our hands dirty with some concrete examples of what this looks like day-to-day, whether you're a DTC brand or a B2B company. Just remember the golden rule: isolate a single variable for each test. That’s how you get clean, actionable insights.

Testing Your Ad Creative

Your creative is the first handshake with your audience, making it one of the most powerful levers you can pull. A classic creative test pits two totally different visual styles against each other to see which one stops the scroll and drives action.

- Hypothesis: A raw, user-generated content (UGC) video will outperform a polished studio photograph because it feels more authentic and builds trust faster.

- Variable: The ad creative itself (Video vs. Static Image).

- Metric to Watch: Return on Ad Spend (ROAS) or Click-Through Rate (CTR).

In this test, you'd run two otherwise identical ads. Version A gets the slick studio photo, while Version B gets the UGC video. Everything else—the copy, headline, audience, and budget—must be an exact match.

Optimizing Your Ad Copy and Headline

The words you choose are just as critical as your visuals. A headline or a line of ad copy can completely change how someone perceives your offer.

A great test here is to compare a specific, benefit-led headline against a broader, more general one.

- Hypothesis: A headline zeroing in on a specific benefit (like "Launch Ads 10x Faster") will get a lower Cost Per Lead (CPL) than a broader headline (like "Smarter Ad Automation").

- Variable: The ad headline.

- Metric to Watch: Cost Per Lead (CPL) or Conversion Rate.

This type of test helps you get inside your audience's head. Do they want a quick, direct solution, or do they need more context before they’ll commit to a click? A solid paid social media strategy is built on this kind of continuous learning.

Key Takeaway: The goal isn't just to find a "winning ad." It's to understand why it won. Did the audience respond to authenticity, a specific benefit, or a sense of urgency? Each test provides a new insight into your customer's psychology.

We've covered some common tests for elements within your ads. The table below breaks down a few more simple but powerful experiments you can run on platforms like Meta.

Common A/B Tests for Meta Ads

| Test Category | Example Variable to Test (A vs. B) | Primary Success Metric |
|---|---|---|
| Audience Targeting | Broad Audience vs. Lookalike Audience (1%) | Cost Per Acquisition (CPA) |
| Call-to-Action | "Shop Now" vs. "Learn More" | Click-Through Rate (CTR) |
| Ad Format | Single Image Ad vs. Carousel Ad | Engagement Rate / ROAS |
| Offer/Incentive | "15% Off" vs. "Free Shipping" | Conversion Rate |
| Landing Page | Original Product Page vs. Dedicated Campaign Page | Landing Page Conversion Rate |

Each of these tests helps you dial in your campaign one element at a time, moving from educated guesses to data-backed decisions.
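The success metrics in these tests all come from a handful of raw numbers. Here is a rough sketch of the arithmetic, using a hypothetical `AdResult` class and made-up figures. It assumes conversion rate is measured per click; some teams measure it per visitor or per impression instead.

```python
from dataclasses import dataclass

@dataclass
class AdResult:
    name: str
    impressions: int
    clicks: int
    conversions: int
    spend: float    # total ad spend
    revenue: float  # revenue attributed to the ad

    @property
    def ctr(self) -> float:
        # Click-through rate: clicks per impression.
        return self.clicks / self.impressions

    @property
    def conversion_rate(self) -> float:
        # Assumption: conversions per click.
        return self.conversions / self.clicks

    @property
    def cpa(self) -> float:
        # Cost per acquisition: spend per conversion.
        return self.spend / self.conversions

    @property
    def roas(self) -> float:
        # Return on ad spend: revenue per unit of spend.
        return self.revenue / self.spend

# Illustrative numbers only.
version_a = AdResult("A: Shop Now", 20_000, 400, 20, spend=500.0, revenue=1500.0)
version_b = AdResult("B: Learn More", 20_000, 500, 30, spend=500.0, revenue=2100.0)

for ad in (version_a, version_b):
    print(f"{ad.name}: CTR {ad.ctr:.2%}, CVR {ad.conversion_rate:.2%}, "
          f"CPA {ad.cpa:.2f}, ROAS {ad.roas:.2f}")
```

Pick the one metric that matches your hypothesis before the test starts, and judge the winner on that metric rather than on whichever number happens to look best afterward.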

While tons of marketers A/B test their ads, there's a huge blind spot when it comes to the post-click experience. Shockingly, only 17% of marketers A/B test their landing pages specifically for their paid traffic, even though 60% of marketers test landing pages in general. This points to a massive gap where campaign performance could be improved by making sure the journey is optimized from ad all the way to conversion. You can discover more insights about advertising strategies on Scube Marketing.

Common A/B Testing Mistakes to Avoid

Knowing what A/B testing is and actually running a great test are two totally different things. The concept is straightforward enough, but a few classic pitfalls can completely tank your results, tricking you into making bad decisions based on junk data. Sidestepping these mistakes is just as critical as setting up the test correctly in the first place.

One of the most common blunders is calling the test too early. You see one variation pulling ahead after a day or two and get that itch to declare a winner and move on. Don't do it. Early results are often just statistical noise, not a real sign of what works. You have to let the test run until it hits statistical significance (usually a 95% confidence level) to be sure the outcome wasn't just a fluke.

Testing Too Much at Once

Another classic mistake is trying to test everything at the same time. You change the headline, swap out the main image, and tweak the CTA button color all in one variation. When the test is over, you have no clue which specific change actually caused the lift (or drop) in conversions. Was it the new headline? The image? You'll never know.

A clean A/B test isolates a single variable. This disciplined approach is the only way you’ll learn what truly moves the needle with your audience. If you want to test multiple elements at once, what you're really doing is a multivariate test—a different, more complex type of experiment entirely.

Ignoring Context and Timing

Your test doesn't happen in a bubble. All sorts of external factors can influence user behavior and throw your data for a loop. Running a test over a major holiday, during a massive news event in your industry, or right in the middle of a site-wide sale will give you results that you can't replicate under normal circumstances.

An A/B test is a snapshot of user behavior during a specific period. If that period is unusual, your snapshot will be distorted, providing a misleading picture of what truly works for your audience.

On top of that, don't forget the weekly cycle. How people behave on a Monday morning is wildly different from how they act on a Saturday night. It's crucial to run your test for at least one full week—ideally two—to smooth out these natural ups and downs and get a more accurate picture. A short, three-day test might accidentally favor a variation that only clicks with your weekday crowd.

Some other common errors that will wreck your data include:

- Peeking at results: Constantly checking your data tempts you to stop the test the moment one version looks ahead, and every extra check is another chance for random noise to pass as a win. Set it and forget it (for a while).

- Forgetting about segments: A variation might lose overall but be a massive winner with a specific audience, like mobile users or first-time visitors. Dig into the segments.

- Technical glitches: A slow-loading page or a broken link can kill a variation before it ever has a chance. Always, always QA both versions thoroughly. For paid ads, issues like delivery problems can also skew your numbers; you can learn more about why your Facebook ads might not be delivering in our guide.
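To see why peeking is risky, you can simulate an "A/A test" in which both versions are identical, so any declared winner is a false alarm. The sketch below is an illustration under made-up assumptions (a 10% conversion rate, 10 checks, 200 new users per version between checks) and uses a two-proportion z-test as the significance check. It compares stopping at the first significant peek against waiting for the final result.

```python
import random
from math import sqrt
from statistics import NormalDist

def p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value from a two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    if pooled in (0, 1):
        return 1.0  # no variation at all, so no evidence of a difference
    std_error = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (conv_b / n_b - conv_a / n_a) / std_error
    return 2 * (1 - NormalDist().cdf(abs(z)))

def simulate(runs=400, looks=10, users_per_look=200, rate=0.10, seed=7):
    """Return (false-win rate when peeking, false-win rate at the final look)."""
    rng = random.Random(seed)
    peeking_false_wins = 0
    final_false_wins = 0
    for _run in range(runs):
        conv_a = conv_b = n = 0
        flagged_while_peeking = False
        p = 1.0
        for _look in range(looks):
            # Both versions convert at the same true rate.
            conv_a += sum(rng.random() < rate for _ in range(users_per_look))
            conv_b += sum(rng.random() < rate for _ in range(users_per_look))
            n += users_per_look
            p = p_value(conv_a, n, conv_b, n)
            if p < 0.05:
                flagged_while_peeking = True
        peeking_false_wins += flagged_while_peeking
        final_false_wins += p < 0.05
    return peeking_false_wins / runs, final_false_wins / runs

peeking, final = simulate()
print(f"False wins when peeking: {peeking:.1%}, at the final look only: {final:.1%}")
```

The final-look rate should hover around the 5% you signed up for, while the peeking rate climbs well above it, even though nothing about the two versions differs.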

By steering clear of these common traps, you protect the integrity of your experiment. That way, you can act on the insights you uncover with total confidence.

Scaling Your Testing Efforts with AI and Automation

Setting up A/B tests by hand is a great starting point for any marketing strategy. But that one-by-one approach hits a wall, and it hits it fast. What happens when you want to find the perfect mix of five different headlines, ten unique images, and three calls-to-action?

You’re looking at 5 x 10 x 3, which comes out to 150 different ad variations. Building and keeping tabs on that many tests manually isn't just slow—it's practically impossible for most teams. This is where artificial intelligence and automation completely change the game. They shift your strategy from slow, methodical tests to continuous, high-speed experimentation.
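The arithmetic is easy to verify by enumerating every combination. This short sketch uses placeholder headlines, image filenames, and CTAs (all hypothetical) to build the full list.

```python
from itertools import product

# Placeholder assets: 5 headlines, 10 images, 3 calls-to-action.
headlines = [f"Headline {i}" for i in range(1, 6)]
images = [f"image_{i}.png" for i in range(1, 11)]
ctas = ["Learn More", "Shop Now", "Get Started Free"]

# Every possible headline/image/CTA combination.
variations = [
    {"headline": headline, "image": image, "cta": cta}
    for headline, image, cta in product(headlines, images, ctas)
]

print(len(variations))  # 150
```

Adding just one more headline would push the total to 180, which is why the number of combinations gets out of hand so quickly.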

Moving Beyond Simple A-vs-B Comparisons

Modern marketing platforms are built to handle this kind of complexity at scale. Instead of just pitting two versions against each other, these tools can generate hundreds of creative and copy combinations in minutes. This frees your team from the tedious grind of manual setup.

This technology automates the entire experimental workflow, letting you focus on high-level strategy rather than getting buried in the mechanics of each test. The benefits are immediate and clear:

- Rapid Variation Generation: AI can instantly create countless ad variations by mixing and matching your headlines, primary text, images, and audience segments.

- Automated Deployment: Campaigns with hundreds of variations are launched across different audiences with a single click, saving hours of manual work.

- Real-Time Analysis: The system constantly monitors performance data, identifying which combinations are driving the best results without needing a human to watch over it.

This shift turns A/B testing from a periodic project into an always-on optimization engine. AI doesn't just run your tests faster; it finds winning patterns in the data that a human analyst might completely miss.

Unlocking Efficiency and Scalable Growth

The real power here is efficiency. AI-driven platforms act as a force multiplier for your marketing efforts, instantly pinpointing which creative element, copy angle, or audience segment delivers the highest ROAS or lowest CPA. You could learn, for example, that your user-generated videos perform best with a short, punchy headline when targeted to a lookalike audience—and you could learn it fast.

This kind of insight allows you to make smarter budget decisions, cutting spend on what’s not working and doubling down on the proven winners. By automating all the heavy lifting, you turn what was once experimental chaos into campaign clarity. If you're looking to go deeper on integrating AI into your testing, this article on AI-powered A/B testing to optimize sales offers some valuable insights.

For marketers running campaigns on platforms like Meta, this automation is a game-changer. AdStellar’s platform, for instance, is designed to generate and launch these large-scale experiments quickly, giving you clear insights on what works. You can learn more about applying these principles in our guide to using AI for Facebook Ads. This tech-forward approach takes the guesswork out of the equation and unlocks a truly scalable path to growth.

Your Top A/B Testing Questions, Answered

As you start dipping your toes into A/B testing, a handful of questions pop up almost immediately. It’s one thing to understand the theory, but another to put it into practice. Let's clear up the most common hurdles so you can start testing with confidence.

How Long Should My A/B Test Run?

There’s no single magic number here, but a solid rule of thumb is to let your test run for at least one to two full weeks. Why? Because user behavior isn’t the same every day. A busy Monday is worlds away from a quiet Saturday, and running the test over a full business cycle helps smooth out those daily peaks and valleys.

Even more critical, though, is letting the test run long enough to hit statistical significance. This is the point where your testing tool has gathered enough data—visits, clicks, conversions—to confidently say the result isn't just a fluke. Pulling the plug too early is one of the easiest ways to get a misleading result.
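One way to combine the two rules is to work out how many days your traffic needs to reach the target sample, then round up to whole weeks. The helper below is a rough sketch; `estimate_duration_days` and the traffic figures are hypothetical, and the target of 1,000 visitors per variation is just an example input.

```python
from math import ceil

def estimate_duration_days(visitors_per_variation, daily_visitors,
                           variations=2, min_weeks=1):
    """Days to run a test, rounded up to full weeks."""
    total_needed = visitors_per_variation * variations
    # Days required for traffic alone to reach the target sample.
    days_for_sample = ceil(total_needed / daily_visitors)
    # Round up to whole weeks so every weekday is represented equally.
    weeks = max(min_weeks, ceil(days_for_sample / 7))
    return weeks * 7

# 150 visitors a day entering the test, 1,000 needed per version.
print(estimate_duration_days(visitors_per_variation=1000, daily_visitors=150))  # 14

# Plenty of traffic: still run at least one full week.
print(estimate_duration_days(visitors_per_variation=1000, daily_visitors=1000))  # 7
```

Reaching the visitor target is a minimum, not a finish line. The test still has to reach statistical significance before you call it.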

What’s a Good Sample Size for a Test?

The "right" sample size really depends on your current conversion rate and how big of a change you’re hoping to see. As a general guideline, try to aim for at least 1,000 visitors and 100 conversions for each variation. Of course, if you don't have a ton of traffic, hitting those numbers can take a while.

The real goal is to collect enough data to make a reliable call. If your traffic is on the lower side, don't waste time testing a tiny button color change. Instead, focus your energy on high-impact changes, like a completely different landing page offer. Small tweaks require a massive sample size to prove they made a difference.
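To get a feel for how much more traffic small tweaks demand, you can use a standard sample-size approximation for comparing two conversion rates. The sketch below assumes a two-sided test at 95% confidence with 80% power, a common default rather than a rule, and the 5% baseline rate is illustrative.

```python
from math import ceil
from statistics import NormalDist

def sample_size_per_variation(baseline_rate, relative_lift,
                              alpha=0.05, power=0.80):
    """Approximate visitors needed per variation to detect a given lift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for 95% confidence
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)

# Baseline conversion rate of 5%.
small_tweak = sample_size_per_variation(0.05, relative_lift=0.10)  # 5% -> 5.5%
big_change = sample_size_per_variation(0.05, relative_lift=0.50)   # 5% -> 7.5%

print(f"Small tweak: {small_tweak:,} visitors per variation")
print(f"Big change:  {big_change:,} visitors per variation")
```

Under these assumptions, detecting the small lift takes roughly twenty times the traffic of the large one, which is why low-traffic sites are better off testing bold changes.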

Can I A/B Test More Than One Thing at a Time?

In short, no. By definition, an A/B test isolates a single variable. You have one control (A) and one variation (B) where only one thing is different. If you change both the headline and the main image, you'll have no idea which change actually caused the shift in performance. Was it the new copy, the new photo, or the combination of both? You'll never know for sure.

If you’re itching to test multiple changes at once, you're moving into the realm of multivariate testing. It’s a more complex type of experiment that demands way more traffic to get a clear, reliable result.

Ready to stop guessing and start scaling your ads with data? AdStellar AI automates the entire testing workflow, letting you launch hundreds of ad variations in minutes and automatically find the winning combinations that drive real growth. Discover how to scale your Meta campaigns 10x faster at https://www.adstellar.ai.